1. 架构篇 1.1 kubernetes 架构说明

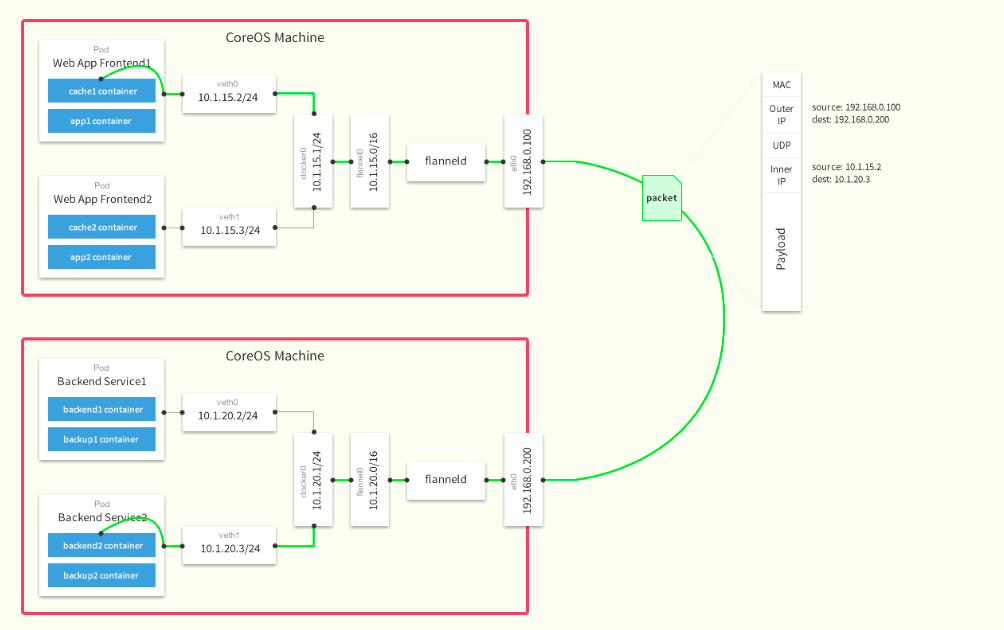

1.2 Flannel网络架构图

集群功能各模块功能描述: Master节点:

Node节点:

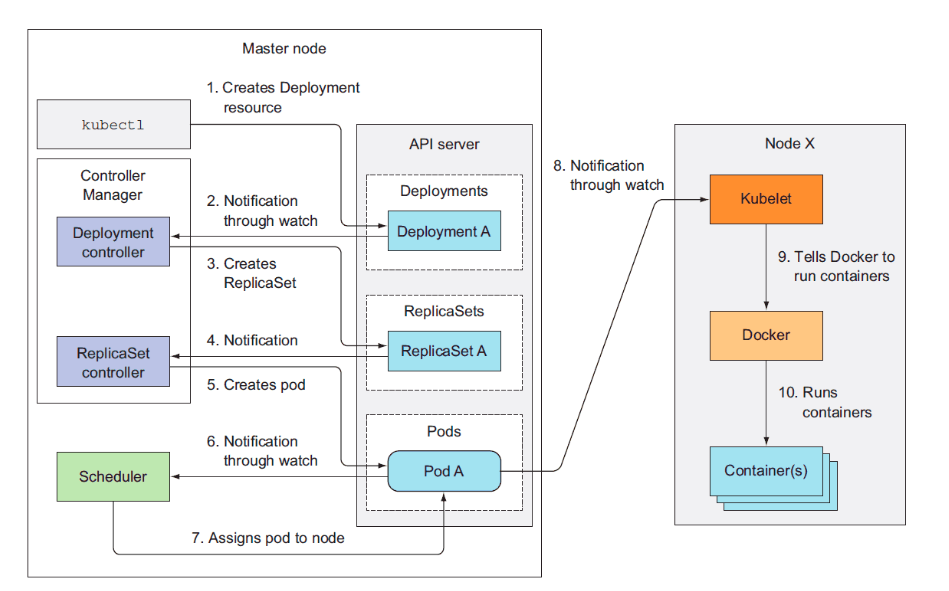

1.3 Kubernetes工作流程

2. 环境说明 2.1 部署节点说明

主机名

IP

用途

部署软件

k8s-1

192.168.123.110

master

apiserver,scheduler,controller-manager etcd,flanneld

k8s-2

192.168.123.120

node

kubelet,kube-proxy etcd,flanneld

k8s-3

192.168.123.130

node

kubelet,kube-proxy etcd,flanneld

2.2 操作系统环境 1 2 3 4 5 6 7 8 9 10 11 12 [root@k8s-1 /root] master # uname -r3.10 .0 -957 .el7.x86_64[root@k8s-1 /root] master # cat /etc/redhat-releaseCentOS Linux release 7.6 .1810 (Core) [root@k8s-1 /root] master # sestatus SELinux status: disabled

2.3 软件包版本

3. Kubernetes 安装及配置 3.1 初始化环境 3.1.1 设置关闭防火墙及SELINUX 1 2 3 4 systemctl stop firewalld && systemctl disable firewalld setenforce 0 vi /etc/selinux/config SELINUX=disabled

3.1.2 关闭swap 1 2 swapoff -a && sysctl -w vm.swappiness=0 sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

3.1.3 设置Docker所需参数 1 2 3 4 5 6 7 8 9 10 11 12 cat > /etc/sysctl.d/kubernetes.conf <<EOF net.ipv4.ip_forward=1 net.ipv4.tcp_tw_recycle=0 vm.swappiness=0 vm.overcommit_memory=1 vm.panic_on_oom=0 fs.inotify.max_user_watches=89100 fs.file-max=52706963 fs.nr_open=52706963 net.ipv6.conf.all.disable_ipv6=1 EOF sysctl -p /etc/sysctl.d/kubernetes.conf

3.1.4 安装 Docker 1 2 3 4 5 6 7 8 9 10 # 配置yum源 cd /etc/yum.repos.d/ wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo yum clean all && yum repolist -y # 安装docker ,版本 18.06.2 yum install docker-ce-18.06.2.ce-3.el7 -y # 启动 systemctl start docker && systemctl enable docker

3.1.5 创建相关目录 1 2 3 4 5 6 7 # 创建安装包存储目录 mkdir /data/{install,ssl_config} -p mkdir /data/ssl_config/{etcd,kubernetes} -p # 创建安装目录 mkdir /cloud/k8s/etcd/{bin,cfg,ssl} -p mkdir /cloud/k8s/kubernetes/{bin,cfg,ssl} -p

3.1.6 SSH 互信配置 1 2 3 4 5 6 7 8 9 10 添加hoosts解析 cat >> /etc/hosts << EOF 192.168.220.110 k8s-1 192.168.220.120 k8s-2 192.168.220.130 k8s-3 EOF ssh-keygen ssh-copy-id k8s-1 ssh-copy-id k8s-2 ssh-copy-id k8s-3

3.1.7 添加 PATH 变量 1 2 3 4 5 6 7 cat >> /etc/profile << EOF export PATH=$PATH:/cloud/k8s/etcd/bin/:/cloud/k8s/kubernetes/bin/ EOF cat >> /etc/profile.d/k8s.sh NODE_IP=192.168.220.110 #当前节点ip EOF

3.2 创建ssl证书 3.2.1 安装及配置CFSSL 1 2 3 4 5 6 7 wget https:// pkg.cfssl.org/R1.2/ cfssl_linux-amd64 wget https:// pkg.cfssl.org/R1.2/ cfssljson_linux-amd64 wget https:// pkg.cfssl.org/R1.2/ cfssl-certinfo_linux-amd64 chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64 mv cfssl_linux-amd64 /usr/ local/bin/ cfssl mv cfssljson_linux-amd64 /usr/ local/bin/ cfssljson mv cfssl-certinfo_linux-amd64 /usr/ bin/cfssl-certinfo

3.2.2 创建 ETCD 相关证书 以下操作均在/data/ssl_config/etcd/目录中etcd证书ca配置

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 cd /data/ ssl_config/etcd/ cat << EOF | tee ca-config.json { "signing" : { "default" : { "expiry" : "876000h" }, "profiles" : { "www" : { "expiry" : "876000h" , "usages" : [ "signing" , "key encipherment" , "server auth" , "client auth" ] } } } } EOF

创建 ETCD CA 配置文件

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 cat << EOF | tee ca-csr .json{ "CN" : "etcd CA" , "key" : { "algo" : "rsa" , "size" : 2048 }, "names" : [ { "C" : "CN" , "L" : "Beijing" , "ST" : "Beijing" } ] } EOF

创建 ETCD Server 证书

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 cat << EOF | tee server -csr.json { "CN": "etcd", "hosts": [ "k8s-3", "k8s-2", "k8s-1", "192.168.220.110", "192.168.220.120", "192.168.220.130" ], "key": { "algo": "rsa", "size": 2048 }, "names": [ { "C": "CN", "L": "Beijing", "ST": "Beijing" } ] } EOF

生成 ETCD CA 证书和私钥

1 2 3 4 5 6 7 8 cd /data/ssl_config/etcd/ cfssl gencert -initca ca-csr.json | cfssljson -bare ca - cfssl gencert -ca =ca.pem \ -ca-key =ca-key.pem \-config =ca-config.json \-profile =www server-csr.json | cfssljson -bare server

3.2.3 创建 Kubernetes 相关证书 以下操作均在/data/ssl_config/kubernetes/目录中kubernetes 证书ca配置

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 cd /data/ ssl_config/kubernetes/ cat << EOF | tee ca-config.json { "signing" : { "default" : { "expiry" : "876000h" }, "profiles" : { "kubernetes" : { "expiry" : "876000h" , "usages" : [ "signing" , "key encipherment" , "server auth" , "client auth" ] } } } } EOF

创建ca证书配置

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 cat << EOF | tee ca-csr .json{ "CN" : "kubernetes" , "key" : { "algo" : "rsa" , "size" : 2048 }, "names" : [ { "C" : "CN" , "L" : "Beijing" , "ST" : "Beijing" , "O" : "k8s" , "OU" : "System" } ] } EOF

生成API_SERVER证书

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 cat << EOF | tee server -csr.json { "CN": "kubernetes", "hosts": [ "10.0.0.1", "127.0.0.1", "192.168.220.110", "k8s-1", "kubernetes", "kubernetes.default", "kubernetes.default.svc", "kubernetes.default.svc.cluster", "kubernetes.default.svc.cluster.local" ], "key": { "algo": "rsa", "size": 2048 }, "names": [ { "C": "CN", "L": "Beijing", "ST": "Beijing", "O": "k8s", "OU": "System" } ] } EOF

创建 Kubernetes Proxy 证书

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 cat << EOF | tee kube-proxy-csr .json{ "CN" : "system:kube-proxy" , "hosts" : [], "key" : { "algo" : "rsa" , "size" : 2048 }, "names" : [ { "C" : "CN" , "L" : "Beijing" , "ST" : "Beijing" , "O" : "k8s" , "OU" : "System" } ] } EOF

生成 kubernetes CA 证书和私钥

1 2 3 4 5 6 cfssl gencert -initca ca-csr.json | cfssljson -bare ca - cfssl gencert -ca =ca.pem -ca-key =ca-key.pem -config =ca-config.json -profile =kubernetes server-csr.json | cfssljson -bare server cfssl gencert -ca =ca.pem -ca-key =ca-key.pem -config =ca-config.json -profile =kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

3.3 部署etcd 3.3.1 配置软件包 1 2 3 4 cd /data/i nstall/ tar -xvf etcd-v3.3.10 -linux-amd64.tar.gz cd etcd-v3.3.10 -linux-amd64/ cp etcd etcdctl /cloud/ k8s/etcd/ bin/

3.3.2 编辑etcd配置文件 1 2 3 4 5 6 7 8 9 10 11 12 13 14 vim /cloud/k8s/etcd/cfg/etcd NODE_IP =192.168.220.110ETCD_NAME ="etcd01" ETCD_DATA_DIR ="/var/lib/etcd/default.etcd" ETCD_LISTEN_PEER_URLS ="https://${NODE_IP} :2380" ETCD_LISTEN_CLIENT_URLS ="https://${NODE_IP} :2379" ETCD_INITIAL_ADVERTISE_PEER_URLS ="https://${NODE_IP} :2380" ETCD_ADVERTISE_CLIENT_URLS ="https://${NODE_IP} :2379" ETCD_INITIAL_CLUSTER ="etcd01=https://192.168.220.110:2380,etcd02=https://192.168.220.120:2380,etcd03=https://192.168.220.130:2380" ETCD_INITIAL_CLUSTER_TOKEN ="etcd-cluster" ETCD_INITIAL_CLUSTER_STATE ="new"

3.3.3 创建 etcd的 systemd unit 文件 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 vim /usr/lib/systemd/system/etcd.service [Unit] Description =Etcd Server After =network.targetAfter =network-online.targetWants =network-online.target[Service] Type =notifyExecStart =/usr/k8s/bin/etcd \--name =${ETCD_NAME} \--data-dir =${ETCD_DATA_DIR} \--listen-peer-urls =${ETCD_LISTEN_PEER_URLS} \--listen-client-urls =${ETCD_LISTEN_CLIENT_URLS} ,http://127.0.0.1:2379 \--advertise-client-urls =${ETCD_ADVERTISE_CLIENT_URLS} \--initial-advertise-peer-urls =${ETCD_INITIAL_ADVERTISE_PEER_URLS} \--initial-cluster =${ETCD_INITIAL_CLUSTER} \--initial-cluster-token =${ETCD_INITIAL_CLUSTER_TOKEN} \--initial-cluster-state =new \--cert-file =/cloud/k8s/etcd/ssl/server.pem \--key-file =/cloud/k8s/etcd/ssl/server-key.pem \--peer-cert-file =/cloud/k8s/etcd/ssl/server.pem \--peer-key-file =/cloud/k8s/etcd/ssl/server-key.pem \--trusted-ca-file =/cloud/k8s/etcd/ssl/ca.pem \--peer-trusted-ca-file =/cloud/k8s/etcd/ssl/ca.pemRestart =on-failureLimitNOFILE =65536[Install] WantedBy =multi-user.target

3.3.4 配置证书文件 1 2 cd /data/ ssl_config/etcd/ cp ca*pem server*pem /cloud/ k8s/etcd/ ssl

3.3.5 配置文件拷贝到 节点1、节点2 1 2 3 4 5 cd /cloud/k8s/ scp -r etcd k8s-2 :/cloud/k8s/ scp -r etcd k8s-3 :/cloud/k8s/ scp /usr/lib/systemd/system/etcd.service k8s-2 :/usr/lib/systemd/system/etcd .service scp /usr/lib/systemd/system/etcd.service k8s-3 :/usr/lib/systemd/system/etcd .service

修改另外两台机器配置文件

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 cat > /cloud/k8s/etcd/cfg/etcd << EOF ETCD_NAME ="etcd02" ETCD_DATA_DIR ="/var/lib/etcd/default.etcd" ETCD_LISTEN_PEER_URLS ="https://${NODE_IP} :2380" ETCD_LISTEN_CLIENT_URLS ="https://${NODE_IP} :2379" ETCD_INITIAL_ADVERTISE_PEER_URLS ="https://${NODE_IP} :2380" ETCD_ADVERTISE_CLIENT_URLS ="https://${NODE_IP} :2379" ETCD_INITIAL_CLUSTER ="etcd01=https://192.168.220.110:2380,etcd02=https://192.168.220.120:2380,etcd03=https://192.168.220.130:2380" ETCD_INITIAL_CLUSTER_TOKEN ="etcd-cluster" ETCD_INITIAL_CLUSTER_STATE ="new" EOF cat > /cloud/k8s/etcd/cfg/etcd << EOF NODE_IP =192.168.220.130ETCD_NAME ="etcd03" ETCD_DATA_DIR ="/var/lib/etcd/default.etcd" ETCD_LISTEN_PEER_URLS ="https://${NODE_IP} :2380" ETCD_LISTEN_CLIENT_URLS ="https://${NODE_IP} :2379" ETCD_INITIAL_ADVERTISE_PEER_URLS ="https://192.168.220.130:2380" ETCD_ADVERTISE_CLIENT_URLS ="https://192.168.220.130:2379" ETCD_INITIAL_CLUSTER ="etcd01=https://192.168.220.110:2380,etcd02=https://192.168.220.120:2380,etcd03=https://192.168.220.130:2380" ETCD_INITIAL_CLUSTER_TOKEN ="etcd-cluster" ETCD_INITIAL_CLUSTER_STATE ="new" EOF

另外两台机器配置完成后,启动etcd服务,三台都需启动。

3.3.6 启动ETCD服务 1 2 3 4 system ctl daemon-reloadsystem ctl enable etcdsystem ctl start etcd

3.3.7 检查服务是否正常 1 2 3 4 5 6 [root@k8s -1 /cloud/k8s/etcd/bin] master etcdctl --ca-file=/cloud/ k8s/etcd/ssl/ca.pem --cert-file=/cloud/ k8s/etcd/ssl/server.pem --key-file=/cloud/ k8s/etcd/ssl/server-key.pem --endpoints="https://192.168.220.110:2379,https://192.168.220.120:2379,https://192.168.220.130:2379" cluster-health member 4c6bfb873a73368c is healthy : got healthy result from https : member 622f71dbd55b34ce is healthy : got healthy result from https : member f14004aa5403b07d is healthy : got healthy result from https : cluster is healthy

3.4 部署Flannel网络 3.4.1 向 etcd 写入集群 Pod 网段信息 1 2 3 4 5 cd cd /cloud/k8s/etcd/ssl/ etcdctl --ca-file=ca .pem --cert-file=server .pem --key-file=server-key .pem \ --endpoints= "https://192.168.220.110:2379,https://192.168.220.120:2379,https://192.168.220.130:2379" \set /coreos.com/network/config \'{ "Network" : "172.18.0.0/16" , "Backend" : {"Type" : "vxlan" }}'

1 写入的 Pod 网段 ${CLUSTER_CIDR} 必须是 /16 段地址,必须与 kube-controller-manager 的 --cluster-cidr 参数值一致;

3.4.2 部署 flannel 1 2 3 cd /data/i nstall/ tar xf flannel-v0.11.0 -linux-amd64.tar.gz mv flanneld mk-docker-opts.sh /cloud/ k8s/kubernetes/ bin/

3.5.3 配置flannel 1 2 vim /cloud/ k8s/kubernetes/ cfg/flanneld FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.220.110:2379,https://192.168.220.120:2379,https://192.168.220.130:2379 -etcd-cafile=/cloud/k8s/etcd/ssl/ca.pem -etcd-certfile=/cloud/k8s/etcd/ssl/server.pem -etcd-keyfile=/cloud/k8s/etcd/ssl/server-key.pem"

3.5.4 创建 flanneld 的 systemd unit 文件 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 vim /usr/ lib/systemd/ system/flanneld.service [Unit] Description=Flanneld overlay address etcd agent After=network-online.target network.target Before=docker.service [Service] Type=notify EnvironmentFile=/cloud/ k8s/kubernetes/ cfg/flanneld ExecStart=/cloud/ k8s/kubernetes/ bin/flanneld --ip-masq $FLANNEL_OPTIONS ExecStartPost=/cloud/ k8s/kubernetes/ bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d / run/flannel/ subnet.env Restart=on-failure [Install] WantedBy=multi-user.target

1 mk-docker-opts.sh 脚本将分配给 flanneld 的 Pod 子网网段信息写入 /run/ flannel/docker 文件,后续 docker 启动时 使用这个文件中的环境变量配置 docker0 网桥;

flanneld 使用系统缺省路由所在的接口与其它节点通信,对于有多个网络接口(如内网和公网)的节点,可以用 -iface 参数指定通信接口,如上面的 eth0 接口;

3.5.5 配置Docker启动指定子网段 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 vim /usr/lib/systemd/system/docker.service [Unit] Description =Docker Application Container EngineDocumentation =https://docs.docker.comAfter =network-online.target firewalld.serviceWants =network-online.target[Service] Type =notifyEnvironmentFile =/run/flannel/subnet.envExecStart =/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS ExecReload =/bin/kill -s HUP $MAINPID LimitNOFILE =infinityLimitNPROC =infinityLimitCORE =infinityTimeoutStartSec =0Delegate =yes KillMode =processRestart =on-failureStartLimitBurst =3StartLimitInterval =60s[Install] WantedBy =multi-user.target

3.5.6 将flanneld systemd unit 文件到所有节点 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 cd /cloud/k8s/ scp -r kubernetes k8s-2 :/cloud/k8s/ scp -r kubernetes k8s-3 :/cloud/k8s/ scp /cloud/k8s/kubernetes/cfg/flanneld k8s-2 :/cloud/k8s/kubernetes/cfg/flanneld scp /cloud/k8s/kubernetes/cfg/flanneld k8s-3 :/cloud/k8s/kubernetes/cfg/flanneld scp /usr/lib/systemd/system/docker.service k8s-2 :/usr/lib/systemd/system/docker .service scp /usr/lib/systemd/system/docker.service k8s-3 :/usr/lib/systemd/system/docker .service scp /usr/lib/systemd/system/flanneld.service k8s-2 :/usr/lib/systemd/system/flanneld .service scp /usr/lib/systemd/system/flanneld.service k8s-3 :/usr/lib/systemd/system/flanneld .service systemctl daemon-reload systemctl start flanneld systemctl enable flanneld systemctl restart docker

3.5.7 验证fannel网络配置 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 [root@k8s-1 /data/install] master 1 : lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000 link/loopback 00 :00 :00 :00 :00 :00 brd 00 :00 :00 :00 :00 :00 inet 127.0 .0.1 /8 scope host lo valid_lft forever preferred_lft forever 2 : eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000 link/ether 00 :0 c:29 :33 :de:53 brd ff:ff:ff:ff:ff:ff inet 192.168 .123.211 /24 brd 192.168 .123.255 scope global noprefixroute eth0 valid_lft forever preferred_lft forever 3 : flannel.1 : <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default link/ether 1 a:6 f:5 a:0 b:c7:18 brd ff:ff:ff:ff:ff:ff inet 172.18 .30.0 /32 scope global flannel.1 valid_lft forever preferred_lft forever 4 : docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default link/ether 02 :42 :5 c:d0:df:e6 brd ff:ff:ff:ff:ff:ff inet 172.18 .30.1 /24 brd 172.18 .30.255 scope global docker0 valid_lft forever preferred_lft forever

3.5 部署 master 节点

kubernetes master 节点运行如下组件:

3.5.1 配置 master 节点文件 1 2 3 4 cd /data/i nstall/ tar xf kubernetes-server-linux-amd64.tar.gz cd kubernetes/server/ bin/ cp kube-scheduler kube-apiserver kube-controller-manager kubectl /cloud/ k8s/kubernetes/ bin/

3.5.2 配置 kubernetes相关证书 1 2 cd /data/ ssl_config/kubernetes/ cp *pem /cloud/ k8s/kubernetes/ ssl/

3.5.3 部署 kube-apiserver 组件 创建 TLS Bootstrapping Token

1 2 3 4 5 # head -c 16 /dev/urandom | od -An -t x | tr -d ' ' 619bd8a5bcfc6bcde109d710d9a02cc4 # vim /cloud/k8s/kubernetes/cfg/token.csv 619bd8a5bcfc6bcde109d710d9a02cc4,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

创建apiserver配置文件

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 > - << -- -- -- - . . . , . . . , . . . -- - . . . -- - -- - . . . -- - -- - - - . . . -- - - -- - - , , , , , -- - , -- - - - -- - - . -- - - - - -- - - . -- - - - - . -- - - . -- - - - - . -- - . -- - . -- - - .

创建 kube-apiserver systemd unit 文件

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 cat > /etc/systemd/system/kube-apiserver.service << EOF [Unit] Description =Kubernetes API Server Documentation =https://github.com/kubernetes/kubernetes[Service] ExecStart =/usr/k8s/bin/kube-apiserver \\--logtostderr =true \\--v =4 \\--etcd-servers =${ETCD_ENDPOINTS} \\--bind-address =${NODE_IP} \\--secure-port =6443 \\--advertise-address =${NODE_IP} \\--allow-privileged =true \\--service-cluster-ip-range =${SERVICE_CIDR} \\--insecure-bind-address =${NODE_IP} \\--enable-admission-plugins =NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction \\--authorization-mode =RBAC,Node \\--enable-bootstrap-token-auth \\ --token-auth-file =/etc/kubernetes/token.csv \\--service-node-port-range =30000-50000 \\--tls-cert-file =/etc/kubernetes/ssl/kubernetes.pem \\--tls-private-key-file =/etc/kubernetes/ssl/kubernetes-key.pem \\--client-ca-file =/etc/kubernetes/ssl/ca.pem \\--service-account-key-file =/etc/kubernetes/ssl/ca-key.pem \\--etcd-cafile =/etc/kubernetes/ssl/ca.pem \\--etcd-certfile =/etc/kubernetes/ssl/kubernetes.pem \\--etcd-keyfile =/etc/kubernetes/ssl/kubernetes-key.pemRestart =on-failure[Install] WantedBy =multi-user.targetEOF

启动服务

1 2 3 system ctl daemon-reloadsystem ctl enable kube-apiserversystem ctl restart kube-apiserver

查看apiserver是否运行

1 2 3 4 [root@k8s-1 /data/ ssl_config/kubernetes] master # ps -ef |grep kube-apiserver root 5475 1 2 7 月30 ? 01 :57 :41 /cloud/ k8s/kubernetes/ bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https:/ /192.168.123.211:2379,https:/ /192.168.123.212:2379,https:/ /192.168.123.213:2379 --bind-address=192.168.123.211 --secure-port=6443 --advertise-address=192.168.123.211 --allow-privileged=true --service-cluster-ip-range=10.0.0.0/ 24 --enable-admission-plugins=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=RBAC,Node --enable-bootstrap-token-auth --token-auth-file =/cloud/ k8s/kubernetes/ cfg/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/ cloud/k8s/ kubernetes/ssl/ server.pem --tls-private -key-file =/cloud/ k8s/kubernetes/ ssl/server-key.pem --client-ca-file=/ cloud/k8s/ kubernetes/ssl/ ca.pem --service-account-key-file =/cloud/ k8s/kubernetes/ ssl/ca-key.pem --etcd-cafile=/ cloud/k8s/ etcd/ssl/ ca.pem --etcd-certfile=/cloud/ k8s/etcd/ ssl/server.pem --etcd-keyfile=/ cloud/k8s/ etcd/ssl/ server-key.pem root 6886 2930 0 19 :49 pts/0 00 :00 :00 grep --color=auto kube-apiserver

3.5.4 部署kube-scheduler 创建kube-scheduler配置文件

1 2 vim /cloud/ k8s/kubernetes/ cfg/kube-scheduler KUBE_SCHEDULER_OPTS=""

1 --address :在 127.0.0.1:10251 端口接收 http /metrics 请求;kube-scheduler 目前还不支持接收 https 请求;

–kubeconfig:指定 kubeconfig 文件路径,kube-scheduler 使用它连接和验证 kube-apiserver;

创建kube-scheduler systemd unit 文件

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 cat > /etc/systemd/system/kube-scheduler.service << EOF [Unit] Description =Kubernetes Scheduler Documentation =https://github.com/kubernetes/kubernetes[Service] ExecStart =/usr/k8s/bin/kube-scheduler \\--logtostderr =true \\--v =4 \\--address =127.0.0.1 \\--master =${MASTER_URL} :8080 \\--leader-elect Restart =on-failure[Install] WantedBy =multi-user.targetEOF

启动kube-scheduler服务

1 2 3 system ctl daemon-reload system ctl enable kube-scheduler.service system ctl restart kube-scheduler.service

查看kube-scheduler是否运行

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 [root@k8s-1 /data/ssl_config/kubernetes] master # ps -ef |grep kube-scheduler root 7269 2930 0 19 :54 pts/0 00 :00 :00 grep --color=auto kube-scheduler root 13970 1 0 8 月02 ? 00 :01 :21 /cloud/k8s/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=127.0.0.1 :8080 --leader-elect [root@k8s-1 /data/ssl_config/kubernetes] master # systemctl status kube-scheduler ● kube-scheduler.service - Kubernetes Scheduler Loaded: loaded (/usr/lib/systemd/system/kube-scheduler.service Active: active (running) since 五 2019-08-02 18 :33 :11 CST Docs: https://github.com/kubernetes/kubernetes Main PID: 13970 (kube-scheduler) Tasks: 10 Memory: 19 .1 M CGroup: /system.slice/kube-scheduler.service └─13970 /cloud/k8s/kubernetes/bin/kube-scheduler --logtostderr=tru... 8 月 03 19:46:52 k8s-1 kube-scheduler[13970 ]: I0803 19:46 :52.580662 13970 ...d8 月 03 19:47:03 k8s-1 kube-scheduler[13970 ]: I0803 19:47 :03.578985 13970 ...d8 月 03 19:47:03 k8s-1 kube-scheduler[13970 ]: I0803 19:47 :03.583437 13970 ...d8 月 03 19:48:31 k8s-1 kube-scheduler[13970 ]: I0803 19:48 :31.637472 13970 ...d8 月 03 19:48:48 k8s-1 kube-scheduler[13970 ]: I0803 19:48 :48.617124 13970 ...d8 月 03 19:50:00 k8s-1 kube-scheduler[13970 ]: I0803 19:50 :00.589103 13970 ...d8 月 03 19:50:18 k8s-1 kube-scheduler[13970 ]: I0803 19:50 :18.616574 13970 ...d8 月 03 19:52:15 k8s-1 kube-scheduler[13970 ]: I0803 19:52 :15.629976 13970 ...d8 月 03 19:52:45 k8s-1 kube-scheduler[13970 ]: I0803 19:52 :45.580103 13970 ...d8 月 03 19:53:56 k8s-1 kube-scheduler[13970 ]: I0803 19:53 :56.601687 13970 ...dHint: Some lines were ellipsized, use -l to show in full.

3.5.5 部署kube-controller-manager 创建kube-controller-manager配置文件

1 2 vim /cloud/ k8s/kubernetes/ cfg/kube-controller-manager KUBE_CONTROLLER_MANAGER_OPTS=""

创建kube-controller-manager systemd unit 文件

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 cat > /etc/systemd/system/kube-controller-manager.service << EOF [Unit] Description=Kubernetes Controller Manager Documentation=https://github.com/kubernetes/kubernetes [Service] ExecStart=/usr/k8s/bin/kube-controller-manager \\ --logtostderr=true \\ --v=4 \\ --master=${MASTER_URL}:8080 \\ --leader-elect=true \\ --address=127.0.0.1 \\ --service-cluster-ip-range=${SERVICE_CIDR} \\ --cluster-name=kubernetes \\ --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \\ --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \\ --root-ca-file=//etc/kubernetes/ssl/ca.pem \\ --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem Restart=on-failure [Install] WantedBy=multi-user.target EOF

启动服务

1 2 3 system ctl daemon-reloadsystem ctl enable kube-controller-managersystem ctl restart kube-controller-manager

查看kube-controller-manager是否运行

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 [root@k8s-1 /data/ ssl_config/kubernetes] master root 7759 2930 0 19 :59 pts/0 00 :00 :00 grep --color=auto kube-controller-manager root 13972 1 0 8 月02 ? 00 :10 :56 /cloud/ k8s/kubernetes/ bin/kube-controller-manager --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect=true --address=127.0.0.1 --service-cluster-ip-range=10.0.0.0/ 24 --cluster-name=kubernetes --cluster-signing-cert-file=/cloud/ k8s/kubernetes/ ssl/ca.pem --cluster-signing-key-file=/ cloud/k8s/ kubernetes/ssl/ ca-key.pem --root-ca-file=/cloud/ k8s/kubernetes/ ssl/ca.pem --service-account-private-key-file=/ cloud/k8s/ kubernetes/ssl/ ca-key.pem [root@k8s-1 /data/ ssl_config/kubernetes] master ● kube-controller-manager.service - Kubernetes Controller Manager Loaded: loaded (/usr/ lib/systemd/ system/kube-controller-manager.service; enabled; vendor preset: disabled) Active: active (running) since 五 2019 -08 -02 18 :33 :13 CST; 1 day 1 h ago Docs: https://gi thub.com/kubernetes/ kubernetes Main PID: 13972 (kube-controller) Tasks: 10 Memory: 51.9 M CGroup: /system.slice/ kube-controller-manager.service └─13972 /cloud/ k8s/kubernetes/ bin/kube-controller-manager --logtos... 8 月 03 19 :59 :48 k8s-1 kube-controller-manager[13972 ]: I0803 19 :59 :48.522342 ...8 月 03 19 :59 :48 k8s-1 kube-controller-manager[13972 ]: I0803 19 :59 :48.526381 ...8 月 03 19 :59 :50 k8s-1 kube-controller-manager[13972 ]: I0803 19 :59 :50.211112 ...8 月 03 19 :59 :50 k8s-1 kube-controller-manager[13972 ]: I0803 19 :59 :50.455555 ...8 月 03 19 :59 :50 k8s-1 kube-controller-manager[13972 ]: I0803 19 :59 :50.738397 ...8 月 03 19 :59 :50 k8s-1 kube-controller-manager[13972 ]: I0803 19 :59 :50.767332 ...8 月 03 19 :59 :51 k8s-1 kube-controller-manager[13972 ]: I0803 19 :59 :51.502835 ...8 月 03 19 :59 :53 k8s-1 kube-controller-manager[13972 ]: I0803 19 :59 :53.567923 ...8 月 03 19 :59 :53 k8s-1 kube-controller-manager[13972 ]: I0803 19 :59 :53.567955 ...8 月 03 19 :59 :54 k8s-1 kube-controller-manager[13972 ]: I0803 19 :59 :54.321341 ...Hint: Some lines were ellipsized, use -l to show in full.

3.5.6 查看master集群状态 1 2 3 4 5 6 7 8 [root@k8s-1 /root] master NAME STATUS MESSAGE ERROR componentstatus/controller-manager Healthy ok componentstatus/scheduler Healthy ok componentstatus/etcd-2 Healthy {"health" :"true" } componentstatus/etcd-1 Healthy {"health" :"true" } componentstatus/etcd-0 Healthy {"health" :"true" }

3.6 部署node 节点

kubernetes work 节点运行如下组件:

3.6.1 部署 kubelet 组件

1 kublet 运行在每个 worker 节点上,接收 kube-apiserver 发送的请求,管理 Pod 容器,执行交互式命令,如exec 、run 、logs 等;

kublet 启动时自动向 kube-apiserver 注册节点信息,内置的 cadvisor 统计和监控节点的资源使用情况;

配置node节点

1 2 3 cd /data/install/kubernetes/server/bin/ scp kubelet kube-proxy k8s-2 :/cloud/k8s/kubernetes/bin/ scp kubelet kube-proxy k8s-3 :/cloud/k8s/kubernetes/bin/

创建 kubelet bootstrap.kubeconfig 文件

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 cd /data /ssl_config /kubernetes /vim environment .sh BOOTSTRAP_TOKEN =619bd8a5bcfc6bcde109d710d9a02cc4 KUBE_APISERVER ="https://192.168.220.110:6443" source environment .sh kubectl config set-cluster kubernetes \ --certificate-authority=./ca.pem \ --embed-certs=true \ --server=${KUBE_APISERVER} \ --kubeconfig=bootstrap.kubeconfig kubectl config set-credentials kubelet-bootstrap \ --token=${BOOTSTRAP_TOKEN} \ --kubeconfig=bootstrap.kubeconfig kubectl config set-context default \ --cluster=kubernetes \ --user=kubelet-bootstrap \ --kubeconfig=bootstrap.kubeconfig kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

创建 kubelet.kubeconfig 文件

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 vim envkubelet .kubeconfig .sh BOOTSTRAP_TOKEN =619bd8a5bcfc6bcde109d710d9a02cc4 KUBE_APISERVER ="https://192.168.220.110:6443" kubectl config set-cluster kubernetes \ --certificate-authority=./ca.pem \ --embed-certs=true \ --server=${KUBE_APISERVER} \ --kubeconfig=kubelet.kubeconfig kubectl config set-credentials kubelet \ --token=${BOOTSTRAP_TOKEN} \ --kubeconfig=kubelet.kubeconfig kubectl config set-context default \ --cluster=kubernetes \ --user=kubelet \ --kubeconfig=kubelet.kubeconfig kubectl config use-context default --kubeconfig=kubelet.kubeconfig

创建kube-proxy kubeconfig文件

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 vim env_proxy .sh BOOTSTRAP_TOKEN =619bd8a5bcfc6bcde109d710d9a02cc4 KUBE_APISERVER ="https://192.168.220.110:6443" kubectl config set-cluster kubernetes \ --certificate-authority=./ca.pem \ --embed-certs=true \ --server=${KUBE_APISERVER} \ --kubeconfig=kube-proxy.kubeconfig kubectl config set-credentials kube-proxy \ --client-certificate=./kube-proxy.pem \ --client-key=./kube-proxy-key.pem \ --embed-certs=true \ --kubeconfig=kube-proxy.kubeconfig kubectl config set-context default \ --cluster=kubernetes \ --user=kube-proxy \ --kubeconfig=kube-proxy.kubeconfig kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

将bootstrap.kubeconfig kube-proxy.kubeconfig 文件拷贝到所有 nodes节点

1 2 scp -rp bootstrap.kubeconfig kube-proxy.kubeconfig k8s-2 :/cloud/k8s/kubernetes/cfg/ scp -rp bootstrap.kubeconfig kube-proxy.kubeconfig k8s-3 :/cloud/k8s/kubernetes/cfg/

3.6.2 创建kubelet 参数配置文件拷贝到所有 nodes节点 创建 kubelet 参数配置模板文件

1 2 3 4 5 6 7 8 9 10 11 12 13 14 [root@k8s-2 /root ] node cat > /etc/kubernetes/kubelet.config << EOF kind: KubeletConfiguration apiVersion: kubelet.config.k8s.io/v1beta1 address: ${NODE_IP} port: 10250 readOnlyPort: 10255 cgroupDriver: cgroupfs clusterDomain: cluster.local. failSwapOn: false authentication: anonymous: enabled: true EOF

创建kubelet配置文件

1 2 vim /cloud/ k8s/kubernetes/ cfg/kubelet KUBELET_OPTS=""

创建kubelet systemd unit 文件

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 cat > /etc/systemd/system/kubelet.service << EOF [Unit] Description =Kubernetes KubeletAfter =docker.serviceRequires =docker.service[Service] ExecStart =/usr/k8s/bin/kubelet \\--logtostderr =true \\--v =4 \\--hostname-override =${NODE_IP} \\--kubeconfig =/etc/kubernetes/kubelet.kubeconfig \\--bootstrap-kubeconfig =/etc/kubernetes/bootstrap.kubeconfig \\--config =/etc/kubernetes/kubelet.config \\--cert-dir =/etc/kubernetes/ssl \\--pod-infra-container-image =registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0Restart =on-failureKillMode =process[Install] WantedBy =multi-user.targetEOF

将kubelet-bootstrap用户绑定到系统集群角色

1 2 3 kubectl create clusterrolebinding kubelet-bootstrap \ --clusterrole =system:node-bootstrapper \ --user =kubelet-bootstrap

3.6.3 启动kubelet服务 1 2 3 system ctl daemon-reloadsystem ctl enable kubeletsystem ctl restart kubelet

3.6.4 approve kubelet CSR 请求 1 2 3 4 kubectl get csr kubectl certificate approve $NAME kubectl get csr csr 状态变为 Approved,Issued 即可

Requesting User:请求 CSR 的用户,kube-apiserver 对它进行认证和授权;

Subject:请求签名的证书信息;

证书的 CN 是 system:node:kube-node2, Organization 是 system:nodes,kube-apiserver 的 Node 授权模式会授予该证书的相关权限;

3.6.5 查看集群状态 1 2 3 4 5 6 7 8 9 10 11 12 [root@k8s-1 /root] master # kubectl get nodes,csNAME STATUS ROLES AGE VERSION node /k8s-2 Ready node 3d22h v1.15.1 node /k8s-3 Ready node 3d22h v1.15.1 NAME STATUS MESSAGE ERROR componentstatus/scheduler Healthy ok componentstatus/controller-manager Healthy ok componentstatus/etcd-0 Healthy {"health" :"true" } componentstatus/etcd-2 Healthy {"health" :"true" } componentstatus/etcd-1 Healthy {"health" :"true" }

3.7 部署 node kube-proxy 组件

kube-proxy 运行在所有 node节点上,它监听 apiserver 中 service 和 Endpoint 的变化情况,创建路由规则来进行服务负载均衡。

3.7.1 创建kube-proxy配置文件 1 2 3 4 5 6 vim /cloud/ k8s/kubernetes/ cfg/kube-proxy KUBE_PROXY_OPTS="--logtostderr=true \ --v=4 \ --hostname-override=k8s-2 \ --cluster-cidr=10.0.0.0/24 \ --kubeconfig=/cloud/k8s/kubernetes/cfg/kube-proxy.kubeconfig"

3.7.2 创建kube-proxy systemd unit 文件 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 cat > /etc/systemd/system/kube-proxy.service << EOF [Unit] Description =Kubernetes Proxy After =network.target[Service] WorkingDirectory =/var/lib/kube-proxyExecStart =/usr/k8s/bin/kube-proxy \\--logtostderr =true \\--v =4 \\--hostname-override =${NODE_IP} \\--cluster-cidr =${SERVICE_CIDR} \\--kubeconfig =/etc/kubernetes/kube-proxy.kubeconfigRestart =on-failure[Install] WantedBy =multi-user.targetEOF

3.7.3 启动kube-proxy服务 1 2 3 system ctl daemon-reloadsystem ctl enable kube-proxysystem ctl restart kube-proxy

3.7.4 检查服务运行状态 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 [root@k8s-2 /root] node # systemctl status kube-proxy ● kube-proxy.service - Kubernetes Proxy Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service Active: active (running) since 二 2019-07-30 22 :30 :04 CST Main PID: 5764 (kube-proxy) Tasks: 0 Memory: 11 .8 M CGroup: /system.slice/kube-proxy.service ‣ 5764 /cloud/k8s/kubernetes/bin/kube-proxy --logtostderr=true --v... 8 月 03 20:51:33 k8s-2 kube-proxy[5764 ]: I0803 20:51 :33.736946 5764 iptab...]8 月 03 20:51:33 k8s-2 kube-proxy[5764 ]: I0803 20:51 :33.740738 5764 healt..."8 月 03 20:51:33 k8s-2 kube-proxy[5764 ]: I0803 20:51 :33.740762 5764 proxi...s8 月 03 20:51:33 k8s-2 kube-proxy[5764 ]: I0803 20:51 :33.740780 5764 bound...s8 月 03 20:51:34 k8s-2 kube-proxy[5764 ]: I0803 20:51 :34.018700 5764 confi...e8 月 03 20:51:34 k8s-2 kube-proxy[5764 ]: I0803 20:51 :34.028396 5764 confi...e8 月 03 20:51:36 k8s-2 kube-proxy[5764 ]: I0803 20:51 :36.035812 5764 confi...e8 月 03 20:51:36 k8s-2 kube-proxy[5764 ]: I0803 20:51 :36.046563 5764 confi...e8 月 03 20:51:38 k8s-2 kube-proxy[5764 ]: I0803 20:51 :38.045395 5764 confi...e8 月 03 20:51:38 k8s-2 kube-proxy[5764 ]: I0803 20:51 :38.061197 5764 confi...eHint: Some lines were ellipsized, use -l to show in full.

至此 kubernetes 1.15 版本简单部署完成~