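This document walks through every step of deploying a Kubernetes v1.16.6 cluster from binaries (the "Hard Way").

Container runtime: containerd replaces docker, which is simpler and more robust; the corresponding command-line tool is crictl;

Pod network: calico replaces flannel for Pod-to-Pod connectivity and supports larger clusters;

Metrics monitoring (new): the mainstream Prometheus and Grafana stack collects and monitors cluster metrics. If you want to keep using docker and flannel, see the attached documents.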

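Component versions and configuration strategy

Main component versions

| Component | Version |
| --- | --- |
| kubernetes | 1.16.6 |
| etcd | 3.4.3 |
| containerd | 1.3.3 |
| runc | 1.0.0-rc10 |
| calico | 3.12.0 |
| coredns | 1.6.6 |
| dashboard | v2.0.0-rc4 |
| k8s-prometheus-adapter | 0.5.0 |
| prometheus-operator | 0.35.0 |
| prometheus | 2.15.2 |
| elasticsearch, kibana | 7.2.0 |
| cni-plugins | 0.8.5 |
| metrics-server | 0.3.6 |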

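Main configuration strategy

kube-apiserver:

High availability through a node-local nginx layer-4 transparent proxy;

The insecure port 8080 and anonymous access are disabled;

HTTPS requests are served on secure port 6443;

Strict authentication and authorization policies (x509, token, RBAC);

Bootstrap token authentication is enabled to support kubelet TLS bootstrapping;

kubelet and etcd are accessed over HTTPS, so the traffic is encrypted;

kube-controller-manager: 3-node high availability;

The insecure port is disabled; HTTPS requests are served on secure port 10252;

Accesses the apiserver's secure port through a kubeconfig;

Automatically approves kubelet certificate signing requests (CSRs) and rotates certificates when they expire;

Each controller uses its own ServiceAccount to access the apiserver;

kube-scheduler:

3-node high availability;

Accesses the apiserver's secure port through a kubeconfig;

kubelet: bootstrap tokens are created dynamically with kubeadm instead of being configured statically in the apiserver;

Client and server certificates are generated automatically through the TLS bootstrap mechanism and rotated when they expire;

The main parameters are configured in a JSON file of type KubeletConfiguration;

The read-only port is disabled; HTTPS requests are served on secure port 10250, where they are authenticated and authorized, and anonymous or unauthorized access is rejected;

Accesses the apiserver's secure port through a kubeconfig;

kube-proxy: accesses the apiserver's secure port through a kubeconfig;

The main parameters are configured in a JSON file of type KubeProxyConfiguration;

Uses the ipvs proxy mode;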

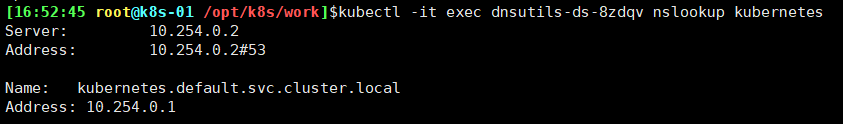

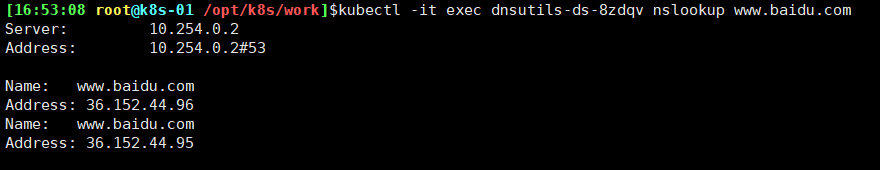

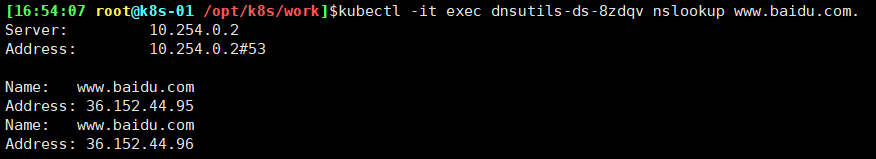

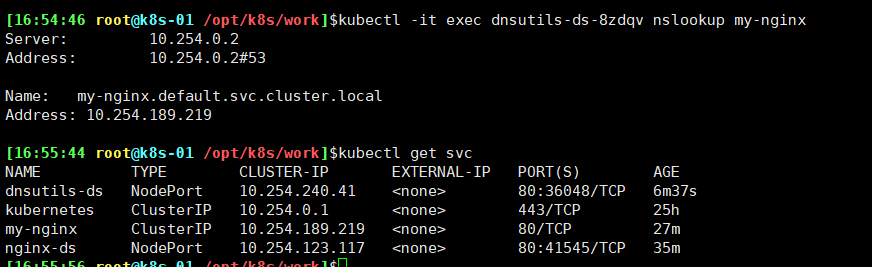

Cluster add-ons:

DNS: coredns, which offers better functionality and performance;

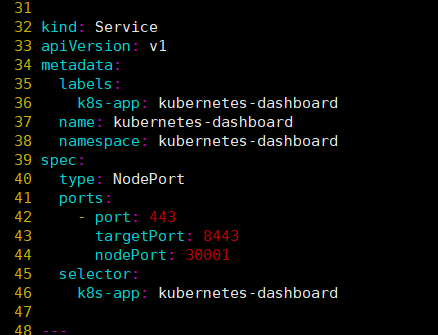

Dashboard: supports login authentication;

Metrics: metrics-server, which accesses the kubelet secure port over HTTPS;

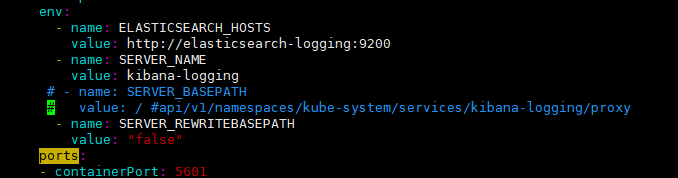

Logging: Elasticsearch, Fluentd, Kibana;

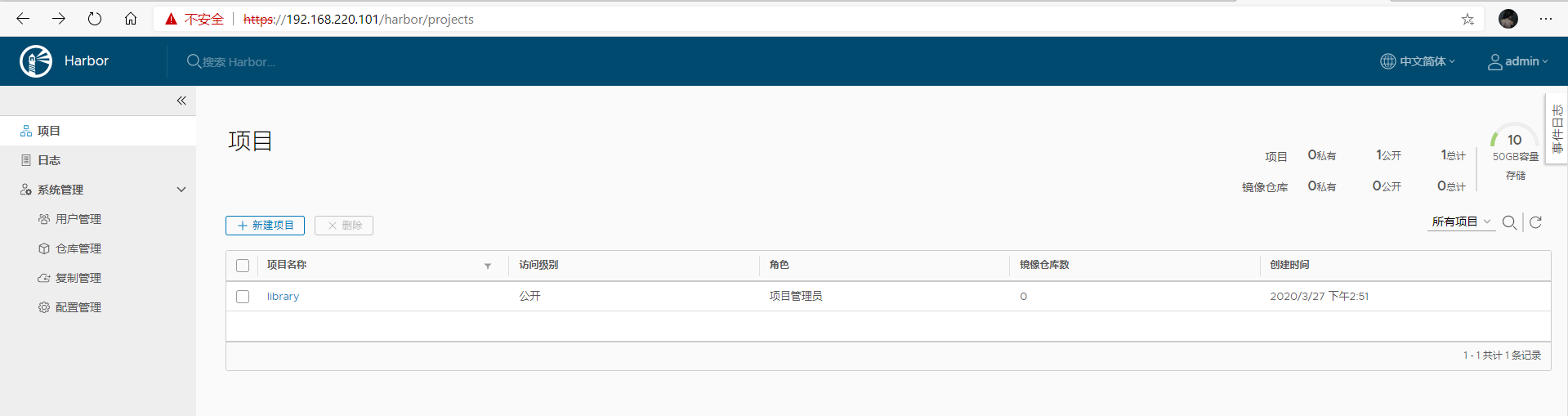

Registry (image repository): docker-registry, harbor;

Initialize the system and global variables

Cluster plan

k8s-01:192.168.220.100

k8s-02:192.168.220.101

k8s-03:192.168.220.102

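The three machines host this document's etcd cluster, master cluster, and worker cluster side by side.

Unless otherwise noted, run the initialization steps in this section on all nodes.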

Set the hostname

```shell
hostnamectl set-hostname k8s-01  # replace k8s-01 with the current host's name
```

If DNS cannot resolve the hostnames, also add the hostname-to-IP mappings to /etc/hosts on every machine:

```shell
cat >> /etc/hosts <<EOF
192.168.220.100 k8s-01
192.168.220.101 k8s-02
192.168.220.102 k8s-03
EOF
```

Log out and log back in as root; the new hostname is now in effect.
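`cat >> /etc/hosts` appends unconditionally, so repeating the step duplicates the entries. Below is a minimal bash sketch of an idempotent variant. It repeats the NODE_NAMES/NODE_IPS values from environment.sh so it can stand alone. `add_hosts_entries` is a hypothetical helper, and it takes the target file as an argument so you can try it on a scratch copy first:

```shell
#!/usr/bin/env bash
# Node list, same values as NODE_NAMES / NODE_IPS in environment.sh
NODE_NAMES=("k8s-01" "k8s-02" "k8s-03")
NODE_IPS=("192.168.220.100" "192.168.220.101" "192.168.220.102")

# add_hosts_entries (hypothetical helper): append "IP NAME" to the given
# hosts file only if that exact line is not already present.
add_hosts_entries() {
  local hosts_file=$1 i line
  for i in "${!NODE_IPS[@]}"; do
    line="${NODE_IPS[i]} ${NODE_NAMES[i]}"
    grep -qxF "$line" "$hosts_file" || echo "$line" >> "$hosts_file"
  done
}

# On a real node: add_hosts_entries /etc/hosts
```

Running it twice leaves exactly one line per node.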

Set up node trust

Run this step only on k8s-01. It lets the root account log in to all nodes without a password:

```shell
ssh-keygen -t rsa
ssh-copy-id root@k8s-01
ssh-copy-id root@k8s-02
ssh-copy-id root@k8s-03
```

Update the PATH variable

```shell
echo 'PATH=/opt/k8s/bin:$PATH' >>/root/.bashrc
source /root/.bashrc
```

The /opt/k8s/bin directory holds the programs downloaded and installed in this document;

Install dependency packages

```shell
yum install -y epel-release
yum install -y chrony conntrack ipvsadm ipset jq curl sysstat libseccomp wget socat git
yum install -y net-tools vim iotop bc zip \
    unzip lrzsz tree ntpdate telnet lsof iostat \
    tcpdump wget traceroute bc net-tools \
    bash-completion
yum -y install bridge-utils chrony ipvsadm ipset sysstat conntrack \
    libseccomp wget tcpdump screen vim nfs-utils bind-utils wget socat \
    telnet sshpass net-tools sysstat lrzsz yum-utils device-mapper-persistent-data \
    lvm2 tree nc lsof strace nmon iptraf iftop rpcbind mlocate ipvsadm
```

kube-proxy in this document uses ipvs mode, and ipvsadm is the management tool for ipvs;

The machines in the etcd cluster need synchronized clocks; chrony handles system time synchronization;

Disable firewalld, SELinux, swap, and NetworkManager

Stop the firewall, flush its rules, and set the default forwarding policy:

```shell
systemctl stop firewalld
systemctl disable firewalld
iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat
iptables -P FORWARD ACCEPT
# Turn swap off now and comment it out in /etc/fstab
swapoff -a
sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
# Disable SELinux now and across reboots
setenforce 0
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
systemctl stop NetworkManager
systemctl disable NetworkManager
```

Tune kernel parameters

```shell
# The net.bridge.* keys only exist once the br_netfilter module is loaded
modprobe br_netfilter
cat > kubernetes.conf <<EOF
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
net.ipv4.ip_forward=1
net.ipv4.tcp_tw_recycle=0
net.ipv4.neigh.default.gc_thresh1=1024
net.ipv4.neigh.default.gc_thresh2=2048
net.ipv4.neigh.default.gc_thresh3=4096
vm.swappiness=0
vm.overcommit_memory=1
vm.panic_on_oom=0
fs.inotify.max_user_instances=8192
fs.inotify.max_user_watches=1048576
fs.file-max=52706963
fs.nr_open=52706963
net.ipv6.conf.all.disable_ipv6=1
net.netfilter.nf_conntrack_max=2310720
EOF
mv kubernetes.conf /etc/sysctl.d/kubernetes.conf
sysctl -p /etc/sysctl.d/kubernetes.conf
```

tcp_tw_recycle is turned off because it conflicts with NAT and can make services unreachable. Kernels 4.12 and later removed this parameter entirely. On such kernels (for example kernel-ml), drop that line, otherwise sysctl reports an error for it;

Raise resource limits

```shell
echo "* soft nofile 655360" >> /etc/security/limits.conf
echo "* hard nofile 655360" >> /etc/security/limits.conf
echo "* soft nproc 655360" >> /etc/security/limits.conf
echo "* hard nproc 655360" >> /etc/security/limits.conf
echo "* soft memlock unlimited" >> /etc/security/limits.conf
echo "* hard memlock unlimited" >> /etc/security/limits.conf
```

Set the system time zone

```shell
timedatectl set-timezone Asia/Shanghai
```

Enable system clock synchronization

```shell
systemctl enable chronyd
systemctl start chronyd
```

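Check the synchronization status:

```shell
timedatectl status
```

Output:

```text
      Local time: Tue 2020-03-17 11:25:12 CST
  Universal time: Tue 2020-03-17 03:25:12 UTC
        RTC time: Tue 2020-03-17 03:25:12
       Time zone: Asia/Shanghai (CST, +0800)
     NTP enabled: yes
NTP synchronized: yes
 RTC in local TZ: no
      DST active: n/a
```

NTP synchronized: yes means the clock is synchronized. Newer systemd versions print this field as System clock synchronized: yes;

NTP enabled: yes means the time synchronization service is enabled. Newer systemd versions print this field as NTP service: active;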
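The field names differ between systemd versions: CentOS 7 prints `NTP synchronized`, while newer releases print `System clock synchronized`. The following small bash sketch accepts either form, which helps when you script the check across nodes. `is_clock_synced` is a hypothetical helper that reads `timedatectl status` output from standard input:

```shell
#!/usr/bin/env bash
# is_clock_synced (hypothetical helper): exit 0 only if the timedatectl
# output on stdin reports a synchronized clock, in either the old
# ("NTP synchronized: yes") or the new ("System clock synchronized: yes") format.
is_clock_synced() {
  grep -Eq '^[[:space:]]*(NTP|System clock) synchronized:[[:space:]]*yes[[:space:]]*$'
}

# On a real node:
#   timedatectl status | is_clock_synced && echo "clock synchronized"
```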

```shell
# Write the current UTC time to the hardware clock
timedatectl set-local-rtc 0
# Restart the services that depend on the system time
systemctl restart rsyslog
systemctl restart crond
```

Stop unrelated services

```shell
systemctl stop postfix && systemctl disable postfix
# Stop dnsmasq (otherwise docker containers may fail to resolve domain names)
systemctl stop dnsmasq && systemctl disable dnsmasq
```

Create the working directories

```shell
mkdir -p /opt/k8s/{bin,work} /etc/{kubernetes,etcd}/cert
```

Create the environment variable file

Create environment.sh (for example with `vim environment.sh`) with the following content:

```shell
ENCRYPTION_KEY="f3be89d17cf7d80847f3bc8b8ea58250"
NODE_NAMES=("k8s-01" "k8s-02" "k8s-03")
NODE_IPS=("192.168.220.100" "192.168.220.101" "192.168.220.102")
# etcd member names (any names will do, as long as they tell the machines apart)
ETCD_NAMES=("etcd-01" "etcd-02" "etcd-03")
ETCD_IPS=("192.168.220.100" "192.168.220.101" "192.168.220.102")
ETCD_DATA_DIR="/opt/k8s/work/etcd/"          # etcd data directory
ETCD_WAL_DIR="/opt/k8s/work/etcd-higher/"    # etcd WAL directory (SSD)
FLANNEL_ETCD_PREFIX="/kubernetes/network"
MASTER_URL="k8s-api.virtual.local"           # MASTER API Server address
# kube-apiserver address used by kubelet; later written to ~/.kube/config
KUBE_APISERVER="https://${MASTER_URL}:6443"
K8S_DIR="/opt/k8s/work"                      # k8s working directory
SERVICE_CIDR="10.254.0.0/16"                 # Service Cluster IP range; must not be routable
CLUSTER_CIDR="172.30.0.0/16"                 # Pod range (Cluster CIDR); unroutable before deployment, routable afterwards
CLUSTER_KUBERNETES_SVC_IP="10.254.0.1"       # kubernetes service IP (pre-allocated, usually the first IP in SERVICE_CIDR)
CLUSTER_DNS_SVC_IP="10.254.0.2"              # cluster DNS service IP (pre-allocated from SERVICE_CIDR)
CLUSTER_DNS_DOMAIN="cluster.local."          # cluster DNS domain
NODE_PORT_RANGE=30000-50000                  # NodePort port range
# etcd client endpoints
ETCD_ENDPOINTS="https://192.168.220.100:2379,https://192.168.220.101:2379,https://192.168.220.102:2379"
ETCD_NODES=etcd-01=https://192.168.220.100:2380,etcd-02=https://192.168.220.101:2380,etcd-03=https://192.168.220.102:2380
CONTAINERD_DIR="/opt/k8s/containerd"
```
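The comments above say CLUSTER_KUBERNETES_SVC_IP is usually the first IP of SERVICE_CIDR and that CLUSTER_DNS_SVC_IP is also pre-allocated from it. Below is a minimal bash sketch that double-checks those two values after you change SERVICE_CIDR. It uses only shell arithmetic, and `nth_ip_in_cidr` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# nth_ip_in_cidr (hypothetical helper): print the network address of an
# IPv4 CIDR plus n, e.g. n=1 gives the first usable address.
nth_ip_in_cidr() {
  local cidr=$1 n=$2
  local ip=${cidr%/*} prefix=${cidr#*/}
  local a b c d
  IFS=. read -r a b c d <<< "$ip"
  # Pack the four octets into one integer, then clear the host bits
  local int=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local addr=$(( (int & mask) + n ))
  # Unpack back into dotted-quad notation
  echo "$(( (addr >> 24) & 255 )).$(( (addr >> 16) & 255 )).$(( (addr >> 8) & 255 )).$(( addr & 255 ))"
}

nth_ip_in_cidr 10.254.0.0/16 1   # → 10.254.0.1 (CLUSTER_KUBERNETES_SVC_IP)
nth_ip_in_cidr 10.254.0.0/16 2   # → 10.254.0.2 (CLUSTER_DNS_SVC_IP)
```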

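Distribute the cluster configuration script

All environment variables used later are defined in environment.sh. Modify them to match your own machines and network, then copy the file to all nodes:

```shell
source environment.sh # edit the file first
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  scp environment.sh root@${node_ip}:/opt/k8s/bin/
  ssh root@${node_ip} "chmod +x /opt/k8s/bin/*"
done
```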

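Upgrade the kernel

The 3.10.x kernel that ships with CentOS 7.x has bugs that make Docker and Kubernetes unstable, for example:

Newer Docker releases (after 1.13) turn on kernel memory accounting, a feature the 3.10 kernel supports only experimentally and that cannot be switched off. Under heavy load, such as containers being started and stopped frequently, this leaks cgroup memory;

A network device reference-count leak produces errors like "kernel:unregister_netdevice: waiting for eth0 to become free. Usage count = 1";

Solutions:

Upgrade the kernel to 4.4.x or later;

Or compile the kernel yourself with the CONFIG_MEMCG_KMEM feature disabled;

Or install Docker 18.09.1 or later, which fixes the problem. However, kubelet also sets kmem (it vendors runc), so kubelet has to be rebuilt with GOFLAGS="-tags=nokmem":

```shell
git clone --branch v1.14.1 --single-branch --depth 1 https://github.com/kubernetes/kubernetes
cd kubernetes
KUBE_GIT_VERSION=v1.14.1 ./build/run.sh make kubelet GOFLAGS="-tags=nokmem"
```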

This document takes the kernel-upgrade route.

The elrepo repository offers two kernel flavors: ml (mainline) is the newest kernel, and lt is the long-term support kernel.

Current kernel on CentOS 7.7:

```text
[11:28:38 root@k8s-01 ~]$uname -a
Linux k8s-01 3.10.0-1062.el7.x86_64
```

Install the lt kernel:

```shell
rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
# After installation, check that the matching kernel menuentry in /boot/grub2/grub.cfg
# contains an initrd16 line; if it does not, install again!
yum --enablerepo=elrepo-kernel install -y kernel-lt
# List all kernels available on the system:
sudo awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg
# 0 : CentOS Linux (4.4.216-1.el7.elrepo.x86_64) 7 (Core)
# 1 : CentOS Linux (3.10.0-1062.el7.x86_64) 7 (Core)
# 2 : CentOS Linux (0-rescue-a695b6c8dd0e412daaa39cdecc50dd60) 7 (Core)
# Boot the new kernel by default
grub2-set-default 0
```

To install the ml version instead:

```shell
yum --enablerepo=elrepo-kernel -y install kernel-ml.x86_64
```

Reboot the machine, then check the version:

```text
[11:36:26 root@k8s-01 ~]$uname -a
Linux k8s-01 4.4.216-1.el7.elrepo.x86_64
```
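To confirm on every node that the reboot really picked up a kernel of at least 4.4 (the threshold named above), a version comparison is enough. Below is a minimal bash sketch. It assumes GNU `sort -V` (version sort) is available, and `kernel_at_least` is a hypothetical helper that drops the distro suffix after the first `-`:

```shell
#!/usr/bin/env bash
# kernel_at_least (hypothetical helper): exit 0 if kernel release $1
# (e.g. the output of `uname -r`) is at least version $2.
kernel_at_least() {
  local have=${1%%-*} want=$2
  # The smaller of the two versions sorts first; if that is $want, $have >= $want
  [ "$(printf '%s\n%s\n' "$want" "$have" | sort -V | head -n1)" = "$want" ]
}

# On a node after the reboot:
#   kernel_at_least "$(uname -r)" 4.4 && echo "kernel OK"
```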


Create the CA root certificate and key

For security, Kubernetes components use x509 certificates to encrypt and authenticate their communication.

The CA (Certificate Authority) is a self-signed root certificate used to sign all certificates created later.

The CA certificate is shared by all nodes in the cluster. It is created only once and then used to sign every other certificate.

This document uses cfssl, CloudFlare's PKI toolkit, to create all certificates.

Note: unless otherwise specified, all operations in this section are performed on the k8s-01 node.

Install the cfssl toolkit

```shell
sudo mkdir -p /opt/k8s/{cert,work} && cd /opt/k8s/work
wget https://github.com/cloudflare/cfssl/releases/download/v1.4.1/cfssl_1.4.1_linux_amd64
mv cfssl_1.4.1_linux_amd64 /opt/k8s/bin/cfssl
wget https://github.com/cloudflare/cfssl/releases/download/v1.4.1/cfssljson_1.4.1_linux_amd64
mv cfssljson_1.4.1_linux_amd64 /opt/k8s/bin/cfssljson
wget https://github.com/cloudflare/cfssl/releases/download/v1.4.1/cfssl-certinfo_1.4.1_linux_amd64
mv cfssl-certinfo_1.4.1_linux_amd64 /opt/k8s/bin/cfssl-certinfo
chmod +x /opt/k8s/bin/*
export PATH=/opt/k8s/bin:$PATH
```

Create the configuration file

The CA configuration file defines the scenarios (profiles) in which the root certificate is used, together with their parameters (usages, expiry, server authentication, client authentication, encryption, and so on):

```shell
cat > ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "876000h"
    },
    "profiles": {
      "kubernetes": {
        "usages": ["signing", "key encipherment", "server auth", "client auth"],
        "expiry": "876000h"
      }
    }
  }
}
EOF
```

signing: the certificate can sign other certificates (the generated ca.pem has CA=TRUE);

server auth: certificates signed with this profile can be presented by a server, and clients verify them against the CA;

client auth: certificates signed with this profile can be presented by a client, and servers verify them against the CA;

"expiry": "876000h": certificates are valid for 100 years;

Create the certificate signing request file

```shell
cd /opt/k8s/work
cat > ca-csr.json << EOF
{
  "CN": "kubernetes-ca",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "opsnull"
    }
  ],
  "ca": {
    "expiry": "876000h"
  }
}
EOF
```

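CN (Common Name): kube-apiserver extracts this field from the certificate as the request's user name (User Name); browsers use it to check whether a site is legitimate;

O (Organization): kube-apiserver extracts this field from the certificate as the group (Group) of the requesting user;

kube-apiserver uses the extracted User and Group as the user identity for RBAC authorization;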
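Because CN becomes the user and O becomes the group, it can help to preview that mapping from a cfssl CSR file before signing. Below is a minimal bash sketch. `csr_identity` is a hypothetical helper that does plain text matching rather than real JSON parsing, which is enough for the flat CSR files in this document but not for arbitrary JSON:

```shell
#!/usr/bin/env bash
# csr_identity (hypothetical helper): print the User (from "CN") and the
# Group(s) (from "O") a certificate built from this CSR would map to.
# Only handles flat "key": "value" string pairs as written in this document.
csr_identity() {
  local file=$1 cn groups
  cn=$(grep -o '"CN" *: *"[^"]*"' "$file" | head -n1 | sed 's/.*: *"\(.*\)"/\1/')
  groups=$(grep -o '"O" *: *"[^"]*"' "$file" | sed 's/.*: *"\(.*\)"/\1/' | paste -sd, -)
  echo "user=${cn} groups=${groups}"
}

# Example with the admin CSR used later in this document
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
{ "CN": "admin", "hosts": [],
  "names": [ { "C": "CN", "ST": "BeiJing", "L": "BeiJing", "O": "system:masters", "OU": "opsnull" } ] }
EOF
csr_identity "$tmp"   # → user=admin groups=system:masters
rm -f "$tmp"
```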

Note:

The combination of CN, C, ST, L, O, and OU must differ between the CSR files of different certificates; otherwise a PEER'S CERTIFICATE HAS AN INVALID SIGNATURE error may occur;

The CSR files created later all use different CNs (with the same C, ST, L, O, OU) to keep the certificates distinct;

Generate the CA certificate and private key

```shell
cd /opt/k8s/work
cfssl gencert -initca ca-csr.json | cfssljson -bare ca
ls ca*
```

Distribute the certificate files

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  ssh root@${node_ip} "mkdir -p /etc/kubernetes/cert"
  scp ca*.pem ca-config.json root@${node_ip}:/etc/kubernetes/cert
done
```

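Install and configure kubectl

This section describes how to install and configure kubectl, the Kubernetes command-line management tool.

Unless otherwise specified, all operations in this section are performed on the k8s-01 node;

This needs to be done only once. The generated kubeconfig file is generic and can be copied to ~/.kube/config on any machine that needs to run kubectl;

Download and distribute the kubectl binary

```shell
cd /opt/k8s/work
# If dl.k8s.io is blocked in your network, arrange your own access
wget https://dl.k8s.io/v1.16.6/kubernetes-client-linux-amd64.tar.gz
tar -xzvf kubernetes-client-linux-amd64.tar.gz
# Copy (not move): the distribution loop below reads from kubernetes/client/bin
cp kubernetes/client/bin/kubectl /opt/k8s/bin/
```

Distribute it to every node that uses kubectl:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  scp kubernetes/client/bin/kubectl root@${node_ip}:/opt/k8s/bin/
  ssh root@${node_ip} "chmod +x /opt/k8s/bin/*"
done
```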

Create the admin certificate and private key

kubectl communicates with kube-apiserver over HTTPS, and kube-apiserver authenticates and authorizes the certificate that kubectl's requests carry.

Create the certificate signing request:

```shell
cd /opt/k8s/work
cat > admin-csr.json << EOF
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:masters",
      "OU": "opsnull"
    }
  ]
}
EOF
```

O: system:masters: when kube-apiserver receives a request from a client using this certificate, it adds the group (Group) identity system:masters to the request;

The predefined ClusterRoleBinding cluster-admin binds Group system:masters to the ClusterRole cluster-admin, which grants the highest privileges for operating the cluster;

This certificate is used only by kubectl as a client certificate, so the hosts field is empty;

Generate the certificate and private key:

```shell
cd /opt/k8s/work
cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes admin-csr.json | cfssljson -bare admin
ls admin*
```

Ignore the warning [WARNING] This certificate lacks a "hosts" field.;

Create the kubeconfig file

kubectl uses a kubeconfig file to access the apiserver. The file contains the kube-apiserver address and the authentication information (CA certificate and client certificate):

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kubectl.kubeconfig
# Set the client authentication parameters
kubectl config set-credentials admin \
  --client-certificate=/opt/k8s/work/admin.pem \
  --client-key=/opt/k8s/work/admin-key.pem \
  --embed-certs=true \
  --kubeconfig=kubectl.kubeconfig
# Set the context parameters
kubectl config set-context kubernetes \
  --cluster=kubernetes \
  --user=admin \
  --kubeconfig=kubectl.kubeconfig
# Set the default context
kubectl config use-context kubernetes --kubeconfig=kubectl.kubeconfig
```

--certificate-authority: the root certificate used to verify the kube-apiserver certificate;

--client-certificate, --client-key: the admin certificate and private key just generated, used for HTTPS communication with kube-apiserver;

--embed-certs=true: embeds the contents of ca.pem and admin.pem in the generated kubectl.kubeconfig file. Without it, only the certificate file paths are written, and copying the kubeconfig to another machine would also require copying the certificate files separately;

--server: the kube-apiserver address, here ${KUBE_APISERVER} (https://k8s-api.virtual.local:6443). The name k8s-api.virtual.local must resolve (for example through /etc/hosts) to a kube-apiserver instance, such as the one on the first node;

Distribute the kubeconfig file

Distribute it to every node that runs kubectl:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  ssh root@${node_ip} "mkdir -p ~/.kube"
  scp kubectl.kubeconfig root@${node_ip}:~/.kube/config
done
```

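Deploy the etcd cluster

etcd is a Raft-based distributed key-value store developed by CoreOS. It is commonly used for service discovery, shared configuration, and concurrency control (such as leader election and distributed locks).

Kubernetes uses the etcd cluster to persist all API objects and runtime data.

This section deploys a three-node highly available etcd cluster:

Download and distribute the etcd binaries;

Create x509 certificates for each etcd node to encrypt traffic between clients (such as etcdctl) and the etcd cluster, and between etcd members;

Create the etcd systemd unit files and configure the service parameters;

Check that the cluster is working;

The etcd node names and IPs are:

```text
k8s-01: 192.168.220.100
k8s-02: 192.168.220.101
k8s-03: 192.168.220.102
```

Note:

Unless otherwise specified, all operations in this section are performed on the k8s-01 node;

flanneld is not compatible with the etcd v3.4.x installed here. To install flanneld (this document uses calico), downgrade etcd to v3.3.x;

Download and distribute the etcd binaries

Download the release package (v3.4.3 here) from the etcd release page:

```shell
cd /opt/k8s/work
wget https://github.com/coreos/etcd/releases/download/v3.4.3/etcd-v3.4.3-linux-amd64.tar.gz
tar -xvf etcd-v3.4.3-linux-amd64.tar.gz
```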

Distribute the binaries to all cluster nodes:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  scp etcd-v3.4.3-linux-amd64/etcd* root@${node_ip}:/opt/k8s/bin
  ssh root@${node_ip} "chmod +x /opt/k8s/bin/*"
done
```

Create the etcd certificate and private key

Create the certificate signing request:

```shell
cd /opt/k8s/work
cat > etcd-csr.json << EOF
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "192.168.220.100",
    "192.168.220.101",
    "192.168.220.102"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "opsnull"
    }
  ]
}
EOF
```

hosts: the list of etcd node IPs authorized to use this certificate; every IP in the etcd cluster must be listed;
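A missing IP in `hosts` only surfaces later as a TLS error when etcd members connect. Below is a minimal bash sketch of a pre-signing check. `missing_csr_hosts` is a hypothetical helper that matches each IP as a quoted string in the CSR file, which is enough for CSR files written as above:

```shell
#!/usr/bin/env bash
# missing_csr_hosts (hypothetical helper): print every given IP that does
# not appear as a quoted string in the CSR file; exit 1 if any is missing.
missing_csr_hosts() {
  local file=$1; shift
  local ip rc=0
  for ip in "$@"; do
    if ! grep -qF "\"${ip}\"" "$file"; then
      echo "missing: ${ip}"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on k8s-01:
#   source /opt/k8s/bin/environment.sh
#   missing_csr_hosts /opt/k8s/work/etcd-csr.json "${ETCD_IPS[@]}" && echo "all etcd IPs present"
```

Matching the IP together with its surrounding quotes prevents, for example, `192.168.220.10` from matching `192.168.220.100`.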

Generate the certificate and private key:

```shell
cd /opt/k8s/work
cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
ls etcd*pem
```

Distribute the generated certificate and private key to each etcd node:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  ssh root@${node_ip} "mkdir -p /etc/etcd/cert"
  scp etcd*.pem root@${node_ip}:/etc/etcd/cert/
done
```

Create the etcd systemd unit template file

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > etcd.service.template <<EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=${ETCD_DATA_DIR}
ExecStart=/opt/k8s/bin/etcd \\
  --data-dir=${ETCD_DATA_DIR} \\
  --wal-dir=${ETCD_WAL_DIR} \\
  --name=##NODE_NAME## \\
  --cert-file=/etc/etcd/cert/etcd.pem \\
  --key-file=/etc/etcd/cert/etcd-key.pem \\
  --trusted-ca-file=/etc/kubernetes/cert/ca.pem \\
  --peer-cert-file=/etc/etcd/cert/etcd.pem \\
  --peer-key-file=/etc/etcd/cert/etcd-key.pem \\
  --peer-trusted-ca-file=/etc/kubernetes/cert/ca.pem \\
  --peer-client-cert-auth \\
  --client-cert-auth \\
  --listen-peer-urls=https://##NODE_IP##:2380 \\
  --initial-advertise-peer-urls=https://##NODE_IP##:2380 \\
  --listen-client-urls=https://##NODE_IP##:2379,http://127.0.0.1:2379 \\
  --advertise-client-urls=https://##NODE_IP##:2379 \\
  --initial-cluster-token=etcd-cluster-0 \\
  --initial-cluster=${ETCD_NODES} \\
  --initial-cluster-state=new \\
  --auto-compaction-mode=periodic \\
  --auto-compaction-retention=1 \\
  --max-request-bytes=33554432 \\
  --quota-backend-bytes=6442450944 \\
  --heartbeat-interval=250 \\
  --election-timeout=2000
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

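WorkingDirectory, --data-dir: set the working directory and the data directory to ${ETCD_DATA_DIR}; this directory must be created before the service starts;

--wal-dir: the WAL directory; for better performance, put it on an SSD or at least on a different disk from --data-dir;

--name: the node name; when --initial-cluster-state is new, the value of --name must appear in the --initial-cluster list;

--cert-file, --key-file: the certificate and private key etcd uses when communicating with clients;

--trusted-ca-file: the CA certificate that signed the client certificates, used to verify them;

--peer-cert-file, --peer-key-file: the certificate and private key etcd uses when communicating with peers;

--peer-trusted-ca-file: the CA certificate that signed the peer certificates, used to verify them;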

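Create and distribute the etcd systemd unit file for each node

Replace the variables in the template to create a systemd unit file for each node:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for (( i=0; i < 3; i++ ))
do
  sed -e "s/##NODE_NAME##/${ETCD_NAMES[i]}/" -e "s/##NODE_IP##/${ETCD_IPS[i]}/" etcd.service.template > etcd-${NODE_IPS[i]}.service
done
ls *.service
```

ETCD_NAMES and ETCD_IPS are bash arrays of the same length that hold the etcd member names and their IPs. The output files are named after NODE_IPS, which are identical to ETCD_IPS here;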
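A typo in a placeholder name (for example `##NODE_IPS##`) silently survives the sed step and only fails when systemd starts etcd. Below is a minimal bash sketch that renders one unit file with the same substitution and refuses to succeed if any `##...##` placeholder is left. `render_unit` is a hypothetical helper. It adds sed's `g` flag so a line with two placeholders is fully replaced. The loop above omits that flag, which works because each template line contains at most one placeholder:

```shell
#!/usr/bin/env bash
# render_unit (hypothetical helper): render <out> from <template>, replacing
# ##NODE_NAME## and ##NODE_IP##; exit 1 if any ##...## placeholder remains.
render_unit() {
  local template=$1 name=$2 ip=$3 out=$4
  sed -e "s/##NODE_NAME##/${name}/g" -e "s/##NODE_IP##/${ip}/g" "$template" > "$out"
  if grep -q '##[A-Z_]*##' "$out"; then
    echo "unreplaced placeholder in ${out}" >&2
    return 1
  fi
}

# Equivalent of the loop above, with the check:
#   for (( i=0; i<3; i++ )); do
#     render_unit etcd.service.template "${ETCD_NAMES[i]}" "${ETCD_IPS[i]}" "etcd-${NODE_IPS[i]}.service"
#   done
```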

Distribute the generated systemd unit files:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  scp etcd-${node_ip}.service root@${node_ip}:/etc/systemd/system/etcd.service
done
```

Start the etcd service

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  ssh root@${node_ip} "mkdir -p ${ETCD_DATA_DIR} ${ETCD_WAL_DIR}"
  ssh root@${node_ip} "systemctl daemon-reload && systemctl enable etcd && systemctl restart etcd &"
done
```

The etcd data and working directories must be created first;

On first start, an etcd process waits for the etcd members on the other nodes to join the cluster, so systemctl start etcd hangs for a while; this is expected;

Check the startup result

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  ssh root@${node_ip} "systemctl status etcd|grep Active"
done
```

Make sure the status is active (running); otherwise check the logs to find the cause:

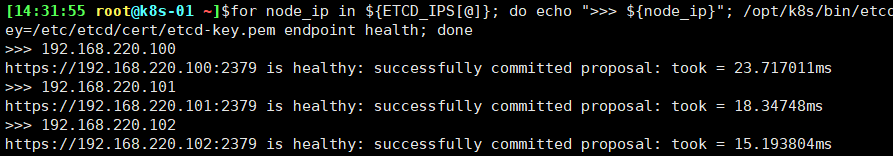

Verify the service status

After the etcd cluster is deployed, run the following on any etcd node:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${ETCD_IPS[@]}
do
  echo ">>> ${node_ip}"
  /opt/k8s/bin/etcdctl \
    --endpoints=https://${node_ip}:2379 \
    --cacert=/etc/kubernetes/cert/ca.pem \
    --cert=/etc/etcd/cert/etcd.pem \
    --key=/etc/etcd/cert/etcd-key.pem endpoint health
done
```

etcd/etcdctl 3.4.3 use the v3 API by default, so you no longer need to set ETCDCTL_API=3 when running etcdctl;

Since Kubernetes 1.13, etcd v2 is no longer supported;

Expected output:

The cluster is healthy when every endpoint reports healthy.

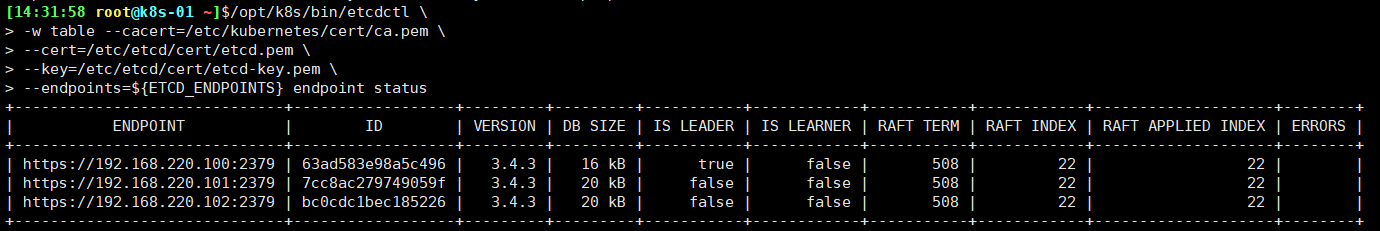
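When the health check runs from a script, reading the output by eye does not scale. Below is a minimal bash sketch that turns it into an exit status. `all_etcd_healthy` is a hypothetical helper, and it assumes the etcdctl v3.4 line format `<endpoint> is healthy: ...` / `<endpoint> is unhealthy: ...`:

```shell
#!/usr/bin/env bash
# all_etcd_healthy (hypothetical helper): exit 0 only if the etcdctl
# `endpoint health` output on stdin contains exactly N healthy endpoints
# and no unhealthy ones. Other lines (e.g. ">>> IP") are ignored.
all_etcd_healthy() {
  local expected=$1 out healthy unhealthy
  out=$(cat)
  # grep -c prints 0 but exits 1 when nothing matches; keep the 0
  healthy=$(grep -c ' is healthy:' <<< "$out" || true)
  unhealthy=$(grep -c ' is unhealthy:' <<< "$out" || true)
  [ "$healthy" -eq "$expected" ] && [ "$unhealthy" -eq 0 ]
}

# Usage: pipe the verification loop above into it, e.g.
#   for node_ip in ${ETCD_IPS[@]}; do ... endpoint health; done 2>&1 | all_etcd_healthy 3
```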

Show the current leader

```shell
source /opt/k8s/bin/environment.sh
/opt/k8s/bin/etcdctl \
  -w table --cacert=/etc/kubernetes/cert/ca.pem \
  --cert=/etc/etcd/cert/etcd.pem \
  --key=/etc/etcd/cert/etcd-key.pem \
  --endpoints=${ETCD_ENDPOINTS} endpoint status
```

Output:

As shown, the current leader is 192.168.220.100.

Deploy the master nodes

The Kubernetes master nodes run the following components:

kube-apiserver

kube-scheduler

kube-controller-manager

kube-apiserver, kube-scheduler, and kube-controller-manager all run as multiple instances:

kube-scheduler and kube-controller-manager automatically elect one leader instance while the other instances stay blocked. When the leader fails, a new leader is elected, so the service stays available;

kube-apiserver is stateless and can be accessed through the kube-nginx proxy (see 06-2. apiserver high availability), so the service stays available;

Download the v1.16.6 binaries

Download and extract the binary tar file:

```shell
cd /opt/k8s/work
# If dl.k8s.io is blocked in your network, arrange your own access
wget https://dl.k8s.io/v1.16.6/kubernetes-server-linux-amd64.tar.gz -O kubernetes-server-linux-amd64.1.16.6.tar.gz
tar -xzvf kubernetes-server-linux-amd64.1.16.6.tar.gz
cd kubernetes
tar -xzvf kubernetes-src.tar.gz
```

Copy the binaries to all master nodes:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  scp kubernetes/server/bin/{apiextensions-apiserver,kube-apiserver,kube-controller-manager,kube-proxy,kube-scheduler,kubeadm,kubectl,kubelet,mounter} root@${node_ip}:/opt/k8s/bin/
  ssh root@${node_ip} "chmod +x /opt/k8s/bin/*"
done
```

Deploy the kube-apiserver cluster

This section deploys a three-instance kube-apiserver cluster; a single master also works.

Create the kubernetes-master certificate and private key

Create the certificate signing request:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
# ${CLUSTER_DNS_DOMAIN%.} strips the trailing dot of "cluster.local.",
# so the last SAN is not written as "...cluster.local.."
cat > kubernetes-csr.json << EOF
{
  "CN": "kubernetes-master",
  "hosts": [
    "127.0.0.1",
    "192.168.220.100",
    "192.168.220.101",
    "192.168.220.102",
    "k8s-api.virtual.local",
    "${CLUSTER_KUBERNETES_SVC_IP}",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local.",
    "kubernetes.default.svc.${CLUSTER_DNS_DOMAIN%.}"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "opsnull"
    }
  ]
}
EOF
```

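The hosts field lists the IPs and domain names authorized to use this certificate; here it contains the master node IPs and the IP and domain names of the kubernetes service;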
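Because the heredoc above is unquoted, a failed or misspelled `source` of environment.sh makes `${CLUSTER_KUBERNETES_SVC_IP}` expand to an empty string, and a domain with a trailing dot can produce a doubled dot. Below is a minimal bash sketch that flags both cases before the CSR is signed. `csr_hosts_ok` is a hypothetical helper that scans every quoted string in the file with plain text matching:

```shell
#!/usr/bin/env bash
# csr_hosts_ok (hypothetical helper): exit 1 and list the offenders if the
# CSR file contains an empty quoted string ("") or a string with "..".
csr_hosts_ok() {
  local file=$1 bad
  bad=$(grep -oE '"[^"]*"' "$file" | grep -E '^""$|\.\.' || true)
  if [ -n "$bad" ]; then
    echo "suspicious entries in ${file}:" >&2
    echo "$bad" >&2
    return 1
  fi
}

# Usage on k8s-01:
#   csr_hosts_ok /opt/k8s/work/kubernetes-csr.json && echo "SAN list looks sane"
```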

Generate the certificate and private key:

```shell
cd /opt/k8s/work
cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes
ls kubernetes*pem
# kubernetes-key.pem  kubernetes.pem
```

Copy the generated certificate and private key to all master nodes:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  ssh root@${node_ip} "mkdir -p /etc/kubernetes/cert"
  scp kubernetes*.pem root@${node_ip}:/etc/kubernetes/cert/
done
```

Create the encryption configuration file

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > encryption-config.yaml <<EOF
kind: EncryptionConfig
apiVersion: v1
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: ${ENCRYPTION_KEY}
      - identity: {}
EOF
```
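The aescbc provider expects `secret` to be a base64-encoded key, and the Kubernetes documentation recommends 32 random bytes. The sample ENCRYPTION_KEY in environment.sh is a 32-character hex string, which base64-decodes to only 24 bytes. It is also published in this document, so it should not be reused. Below is a minimal sketch that generates a fresh key, assuming GNU coreutils `head` and `base64`. `new_encryption_key` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# new_encryption_key (hypothetical helper): 32 random bytes, base64-encoded
# on a single line (44 characters), suitable for ENCRYPTION_KEY.
new_encryption_key() {
  head -c 32 /dev/urandom | base64 | tr -d '\n'
}

key=$(new_encryption_key)
echo "ENCRYPTION_KEY=\"${key}\""   # paste into environment.sh before distributing it
```

Generate the key once on k8s-01 and distribute it with environment.sh. All kube-apiserver instances must share the same key, or they cannot read each other's encrypted Secrets.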

Copy the encryption configuration file to /etc/kubernetes on the master nodes:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  scp encryption-config.yaml root@${node_ip}:/etc/kubernetes/
done
```

Create the audit policy file

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > audit-policy.yaml <<EOF
apiVersion: audit.k8s.io/v1beta1
kind: Policy
rules:
  - level: None
    resources:
      - group: ""
        resources:
          - endpoints
          - services
          - services/status
    users:
      - 'system:kube-proxy'
    verbs:
      - watch

  - level: None
    resources:
      - group: ""
        resources:
          - nodes
          - nodes/status
    userGroups:
      - 'system:nodes'
    verbs:
      - get

  - level: None
    namespaces:
      - kube-system
    resources:
      - group: ""
        resources:
          - endpoints
    users:
      - 'system:kube-controller-manager'
      - 'system:kube-scheduler'
      - 'system:serviceaccount:kube-system:endpoint-controller'
    verbs:
      - get
      - update

  - level: None
    resources:
      - group: ""
        resources:
          - namespaces
          - namespaces/status
          - namespaces/finalize
    users:
      - 'system:apiserver'
    verbs:
      - get

  - level: None
    resources:
      - group: metrics.k8s.io
    users:
      - 'system:kube-controller-manager'
    verbs:
      - get
      - list

  - level: None
    nonResourceURLs:
      - '/healthz*'
      - /version
      - '/swagger*'

  - level: None
    resources:
      - group: ""
        resources:
          - events

  - level: Request
    omitStages:
      - RequestReceived
    resources:
      - group: ""
        resources:
          - nodes/status
          - pods/status
    users:
      - kubelet
      - 'system:node-problem-detector'
      - 'system:serviceaccount:kube-system:node-problem-detector'
    verbs:
      - update
      - patch

  - level: Request
    omitStages:
      - RequestReceived
    resources:
      - group: ""
        resources:
          - nodes/status
          - pods/status
    userGroups:
      - 'system:nodes'
    verbs:
      - update
      - patch

  - level: Request
    omitStages:
      - RequestReceived
    users:
      - 'system:serviceaccount:kube-system:namespace-controller'
    verbs:
      - deletecollection

  - level: Metadata
    omitStages:
      - RequestReceived
    resources:
      - group: ""
        resources:
          - secrets
          - configmaps
      - group: authentication.k8s.io
        resources:
          - tokenreviews

  - level: Request
    omitStages:
      - RequestReceived
    resources:
      - group: ""
      - group: admissionregistration.k8s.io
      - group: apiextensions.k8s.io
      - group: apiregistration.k8s.io
      - group: apps
      - group: authentication.k8s.io
      - group: authorization.k8s.io
      - group: autoscaling
      - group: batch
      - group: certificates.k8s.io
      - group: extensions
      - group: metrics.k8s.io
      - group: networking.k8s.io
      - group: policy
      - group: rbac.authorization.k8s.io
      - group: scheduling.k8s.io
      - group: settings.k8s.io
      - group: storage.k8s.io
    verbs:
      - get
      - list
      - watch

  - level: RequestResponse
    omitStages:
      - RequestReceived
    resources:
      - group: ""
      - group: admissionregistration.k8s.io
      - group: apiextensions.k8s.io
      - group: apiregistration.k8s.io
      - group: apps
      - group: authentication.k8s.io
      - group: authorization.k8s.io
      - group: autoscaling
      - group: batch
      - group: certificates.k8s.io
      - group: extensions
      - group: metrics.k8s.io
      - group: networking.k8s.io
      - group: policy
      - group: rbac.authorization.k8s.io
      - group: scheduling.k8s.io
      - group: settings.k8s.io
      - group: storage.k8s.io

  - level: Metadata
    omitStages:
      - RequestReceived
EOF
```

Distribute the audit policy file:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  scp audit-policy.yaml root@${node_ip}:/etc/kubernetes/audit-policy.yaml
done
```

Create the certificate used later to access metrics-server or kube-prometheus

Create the certificate signing request:

```shell
cd /opt/k8s/work
cat > proxy-client-csr.json << EOF
{
  "CN": "aggregator",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "opsnull"
    }
  ]
}
EOF
```

The CN must be listed in kube-apiserver's --requestheader-allowed-names parameter; otherwise, accessing metrics later fails with a permission error.
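Because a CN that is missing from --requestheader-allowed-names only shows up later as a Forbidden error from `kubectl top`, a quick comparison before starting the apiserver can save a debugging round. Below is a minimal bash sketch. `csr_cn` and `cn_in_allowed_names` are hypothetical helpers, and the CN extraction is plain text matching that is enough for the flat CSR file above:

```shell
#!/usr/bin/env bash
# csr_cn (hypothetical helper): print the "CN" value of a flat cfssl CSR file.
csr_cn() {
  grep -o '"CN" *: *"[^"]*"' "$1" | head -n1 | sed 's/.*: *"\(.*\)"/\1/'
}

# cn_in_allowed_names (hypothetical helper): exit 0 if $1 is one of the
# comma-separated names in $2 (the --requestheader-allowed-names value).
cn_in_allowed_names() {
  local cn=$1 allowed=$2 name
  local IFS=,
  for name in $allowed; do
    [ "$name" = "$cn" ] && return 0
  done
  return 1
}

# On k8s-01:
#   cn_in_allowed_names "$(csr_cn /opt/k8s/work/proxy-client-csr.json)" "aggregator" && echo "CN allowed"
```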

Generate the certificate and private key:

```shell
cd /opt/k8s/work
cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \
  -ca-key=/etc/kubernetes/cert/ca-key.pem \
  -config=/etc/kubernetes/cert/ca-config.json \
  -profile=kubernetes proxy-client-csr.json | cfssljson -bare proxy-client
ls proxy-client*.pem
# proxy-client-key.pem  proxy-client.pem
```

Copy the generated certificate and private key to all master nodes:

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  scp proxy-client*.pem root@${node_ip}:/etc/kubernetes/cert/
done
```

Create the kube-apiserver systemd unit template file

```shell
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > kube-apiserver.service.template <<EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=${K8S_DIR}/kube-apiserver
ExecStart=/opt/k8s/bin/kube-apiserver \\
  --advertise-address=##NODE_IP## \\
  --default-not-ready-toleration-seconds=360 \\
  --default-unreachable-toleration-seconds=360 \\
  --feature-gates=DynamicAuditing=true \\
  --max-mutating-requests-inflight=2000 \\
  --max-requests-inflight=4000 \\
  --default-watch-cache-size=200 \\
  --delete-collection-workers=2 \\
  --encryption-provider-config=/etc/kubernetes/encryption-config.yaml \\
  --etcd-cafile=/etc/kubernetes/cert/ca.pem \\
  --etcd-certfile=/etc/kubernetes/cert/kubernetes.pem \\
  --etcd-keyfile=/etc/kubernetes/cert/kubernetes-key.pem \\
  --etcd-servers=${ETCD_ENDPOINTS} \\
  --bind-address=##NODE_IP## \\
  --secure-port=6443 \\
  --tls-cert-file=/etc/kubernetes/cert/kubernetes.pem \\
  --tls-private-key-file=/etc/kubernetes/cert/kubernetes-key.pem \\
  --insecure-port=0 \\
  --audit-dynamic-configuration \\
  --audit-log-maxage=15 \\
  --audit-log-maxbackup=3 \\
  --audit-log-maxsize=100 \\
  --audit-log-truncate-enabled \\
  --audit-log-path=${K8S_DIR}/kube-apiserver/audit.log \\
  --audit-policy-file=/etc/kubernetes/audit-policy.yaml \\
  --profiling \\
  --anonymous-auth=false \\
  --client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --enable-bootstrap-token-auth \\
  --requestheader-allowed-names="aggregator" \\
  --requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --requestheader-extra-headers-prefix="X-Remote-Extra-" \\
  --requestheader-group-headers=X-Remote-Group \\
  --requestheader-username-headers=X-Remote-User \\
  --service-account-key-file=/etc/kubernetes/cert/ca.pem \\
  --authorization-mode=Node,RBAC \\
  --runtime-config=api/all=true \\
  --enable-admission-plugins=NodeRestriction \\
  --allow-privileged=true \\
  --apiserver-count=3 \\
  --event-ttl=168h \\
  --kubelet-certificate-authority=/etc/kubernetes/cert/ca.pem \\
  --kubelet-client-certificate=/etc/kubernetes/cert/kubernetes.pem \\
  --kubelet-client-key=/etc/kubernetes/cert/kubernetes-key.pem \\
  --kubelet-https=true \\
  --kubelet-timeout=10s \\
  --proxy-client-cert-file=/etc/kubernetes/cert/proxy-client.pem \\
  --proxy-client-key-file=/etc/kubernetes/cert/proxy-client-key.pem \\
  --service-cluster-ip-range=${SERVICE_CIDR} \\
  --service-node-port-range=${NODE_PORT_RANGE} \\
  --logtostderr=true \\
  --enable-aggregator-routing=true \\
  --v=2
Restart=on-failure
RestartSec=10
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

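--advertise-address: the IP the apiserver advertises (the node IP behind the kubernetes service);

--default-*-toleration-seconds: thresholds related to node failures;

--max-*-requests-inflight: upper limits on in-flight requests;

--etcd-*: the certificates for accessing etcd and the etcd server addresses;

--bind-address: the IP the HTTPS server listens on; it must not be 127.0.0.1, otherwise the secure port 6443 is unreachable from other machines;

--secure-port: the HTTPS listening port;

--insecure-port=0: disables the insecure HTTP port (8080);

--tls-*-file: the certificate and private key the apiserver serves HTTPS with;

--audit-*: parameters for the audit policy and the audit log files;

--client-ca-file: verifies the certificates presented by clients (kube-controller-manager, kube-scheduler, kubelet, kube-proxy, and so on);

--enable-bootstrap-token-auth: enables token authentication for kubelet bootstrap;

--requestheader-*: configuration of the kube-apiserver aggregator layer, needed by proxy-client and HPA;

--requestheader-client-ca-file: the CA that signed the certificate specified by --proxy-client-cert-file and --proxy-client-key-file; used when the metrics aggregator is enabled;

--requestheader-allowed-names: the comma-separated CNs allowed for the --proxy-client-cert-file certificate, set here to "aggregator". An empty list would accept any certificate signed by the --requestheader-client-ca-file CA;

--service-account-key-file: the public key used to verify ServiceAccount tokens; kube-controller-manager's --service-account-private-key-file specifies the matching private key that signs them, and the two are used as a pair;

--runtime-config=api/all=true: enables APIs of all versions, such as autoscaling/v2alpha1;

--authorization-mode=Node,RBAC, --anonymous-auth=false: enable the Node and RBAC authorization modes and reject unauthorized requests;

--enable-admission-plugins: enables some plugins that are off by default;

--allow-privileged: allows running containers with privileged permissions;

--apiserver-count=3: the number of apiserver instances;

--event-ttl: how long events are retained;

--kubelet-*: if set, kubelet APIs are accessed over HTTPS. RBAC rules must be defined for the user of the certificate (the user of the kubernetes*.pem certificate above is its CN, kubernetes-master), otherwise calls to the kubelet API are rejected as unauthorized;

--proxy-client-*: the certificate the apiserver uses to access metrics-server;

--service-cluster-ip-range: the Service Cluster IP range;

--service-node-port-range: the NodePort port range;

If the kube-apiserver machine does not run kube-proxy, the --enable-aggregator-routing=true parameter is also required;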

Note:

The CA certificate specified by --requestheader-client-ca-file must have both client auth and server auth;

If --requestheader-allowed-names is not empty and the CN of the --proxy-client-cert-file certificate is not in allowed-names, viewing node or pod metrics later fails with:

```text
$ kubectl top nodes
Error from server (Forbidden): nodes.metrics.k8s.io is forbidden: User "aggregator" cannot list resource "nodes" in API group "metrics.k8s.io" at the cluster scope
```

Create and distribute the kube-apiserver systemd unit file for each node

Substitute the variables in the template file to generate a systemd unit file for each node:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for (( i=0; i < 3; i++ ))
  do
    sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" kube-apiserver.service.template > kube-apiserver-${NODE_IPS[i]}.service
  done
ls kube-apiserver*.service
```

NODE_NAMES and NODE_IPS are bash arrays of the same length, holding the node names and the corresponding IPs;
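The loop above hard-codes 3 nodes and relies on the two arrays lining up. A slightly more defensive variant is sketched below. check_node_arrays and render_units are hypothetical helper names, and the sketch assumes NODE_NAMES and NODE_IPS are the bash arrays defined in environment.sh.

```bash
#!/usr/bin/env bash
# Hypothetical helpers; NODE_NAMES and NODE_IPS are assumed to come from environment.sh.

# Fail early if the two arrays do not have the same length.
check_node_arrays() {
  if [ "${#NODE_NAMES[@]}" -ne "${#NODE_IPS[@]}" ]; then
    echo "NODE_NAMES has ${#NODE_NAMES[@]} entries but NODE_IPS has ${#NODE_IPS[@]}" >&2
    return 1
  fi
}

# render_units TEMPLATE PREFIX
# Writes PREFIX-<ip>.service for every node, iterating over the real array
# length instead of a hard-coded 3.
render_units() {
  local template="$1" prefix="$2" i
  check_node_arrays || return 1
  for (( i=0; i < ${#NODE_IPS[@]}; i++ )); do
    sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" \
        -e "s/##NODE_IP##/${NODE_IPS[i]}/" \
        "$template" > "${prefix}-${NODE_IPS[i]}.service"
  done
}
```

Run in /opt/k8s/work, render_units kube-apiserver.service.template kube-apiserver should produce the same kube-apiserver-<ip>.service files as the loop above. It will refuse to run if the arrays disagree.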

Distribute the generated systemd unit files:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    scp kube-apiserver-${node_ip}.service root@${node_ip}:/etc/systemd/system/kube-apiserver.service
  done
```

Start the kube-apiserver service

```bash
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "mkdir -p ${K8S_DIR}/kube-apiserver"
    ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-apiserver && systemctl restart kube-apiserver"
  done
```

Check the kube-apiserver status

```bash
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "systemctl status kube-apiserver |grep 'Active:'"
  done
```

Make sure the status is active (running); otherwise check the logs to find the cause:

```bash
journalctl -u kube-apiserver
```
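The start and status loops above repeat the same for/ssh pattern. Below is a minimal sketch of a reusable wrapper. on_all_nodes is a hypothetical helper, not part of environment.sh, and it assumes passwordless ssh as root to every IP in NODE_IPS, as the loops above already do. Unlike those loops, it reports failures through its exit status.

```bash
#!/usr/bin/env bash
# Hypothetical helper: on_all_nodes COMMAND
# Runs COMMAND via ssh as root on every IP in NODE_IPS (assumed to come from
# environment.sh), printing a ">>> ip" header per node like the loops above.
# Returns non-zero if COMMAND failed on at least one node.
on_all_nodes() {
  local node_ip rc=0
  for node_ip in "${NODE_IPS[@]}"; do
    echo ">>> ${node_ip}"
    ssh "root@${node_ip}" "$1" || rc=1
  done
  return "$rc"
}
```

For example, on_all_nodes "systemctl is-active kube-apiserver" prints the state on each node. systemctl is-active exits non-zero for a unit that is not active, so the helper's exit status tells you whether every node is running the service.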

Check the cluster status

```
$ kubectl cluster-info
Kubernetes master is running at https://k8s-api.virtual.local:6443

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

$ kubectl get all --all-namespaces
NAMESPACE   NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
default     service/kubernetes   ClusterIP   10.254.0.1   <none>        443/TCP   44m

$ kubectl get componentstatuses
NAME                 AGE
controller-manager   <unknown>
scheduler            <unknown>
etcd-0               <unknown>
etcd-2               <unknown>
etcd-1               <unknown>
```

Kubernetes 1.16.6 has a bug that makes this output always show `<unknown>`, but kubectl get cs -o yaml returns the correct results;

Check the ports kube-apiserver listens on

```
$ sudo netstat -lnpt|grep kube
tcp        0      0 172.27.138.251:6443     0.0.0.0:*               LISTEN      101442/kube-apiserv
```

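6443: the secure port that receives https requests; every request is authenticated and authorized;

Because the insecure port is disabled, nothing listens on 8080;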

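Deploy a highly available kube-controller-manager cluster

This document describes the steps to deploy a highly available kube-controller-manager cluster.

The cluster contains 3 nodes. After startup, the nodes compete in an election that makes one of them the leader, while the others stay blocked. When the leader becomes unavailable, the blocked nodes elect a new leader, which keeps the service available.

To secure communication, this document first generates an x509 certificate and private key. kube-controller-manager uses this certificate in the following two cases:

communicating with the secure port of kube-apiserver;

exporting Prometheus-format metrics on the secure port (https, 10252);

Note: unless otherwise specified, all operations in this document are performed on the k8s-01 node.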

Create the kube-controller-manager certificate and private key

Create the certificate signing request:

```bash
cd /opt/k8s/work
cat > kube-controller-manager-csr.json << EOF
{
    "CN": "system:kube-controller-manager",
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "hosts": [
      "127.0.0.1",
      "192.168.220.100",
      "192.168.220.101",
      "192.168.220.102"
    ],
    "names": [
      {
        "C": "CN",
        "ST": "BeiJing",
        "L": "BeiJing",
        "O": "system:kube-controller-manager",
        "OU": "opsnull"
      }
    ]
}
EOF
```

The hosts list contains the IPs of all kube-controller-manager nodes;

Both CN and O are system:kube-controller-manager; the built-in Kubernetes ClusterRoleBinding system:kube-controller-manager grants kube-controller-manager the permissions it needs to work.
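The hosts list above is typed by hand. It could instead be generated, assuming NODE_IPS from environment.sh lists exactly the master node IPs. csr_hosts_json below is a hypothetical helper name used only for this sketch.

```bash
#!/usr/bin/env bash
# Hypothetical helper: csr_hosts_json
# Prints a JSON array with 127.0.0.1 followed by every IP in NODE_IPS
# (assumed to come from environment.sh), for the "hosts" field of a CSR file.
csr_hosts_json() {
  local ip out='"127.0.0.1"'
  for ip in "${NODE_IPS[@]}"; do
    out="${out}, \"${ip}\""
  done
  printf '[%s]\n' "$out"
}
```

The CSR heredoc (<< EOF) is unquoted, so the shell expands command substitutions inside it. You could therefore write "hosts": $(csr_hosts_json), in place of the literal list.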

Generate the certificate and private key:

```bash
cd /opt/k8s/work
cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager
ls kube-controller-manager*pem
# kube-controller-manager-key.pem  kube-controller-manager.pem
```

Distribute the generated certificate and private key to all master nodes:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    scp kube-controller-manager*.pem root@${node_ip}:/etc/kubernetes/cert/
  done
```

Create and distribute the kubeconfig file

kube-controller-manager uses a kubeconfig file to access apiserver. The file contains the apiserver address, the embedded CA certificate, the kube-controller-manager certificate and related settings:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server="https://##NODE_IP##:6443" \
  --kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=kube-controller-manager.pem \
  --client-key=kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-context system:kube-controller-manager \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=kube-controller-manager.kubeconfig

kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig
```

kube-controller-manager runs on the same nodes as kube-apiserver, so it accesses kube-apiserver directly through the node IP;

Distribute the kubeconfig to all master nodes:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    sed -e "s/##NODE_IP##/${node_ip}/" kube-controller-manager.kubeconfig > kube-controller-manager-${node_ip}.kubeconfig
    scp kube-controller-manager-${node_ip}.kubeconfig root@${node_ip}:/etc/kubernetes/kube-controller-manager.kubeconfig
  done
```

Create the kube-controller-manager systemd unit template file

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > kube-controller-manager.service.template <<EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
WorkingDirectory=${K8S_DIR}/kube-controller-manager
ExecStart=/opt/k8s/bin/kube-controller-manager \\
  --profiling \\
  --cluster-name=kubernetes \\
  --controllers=*,bootstrapsigner,tokencleaner \\
  --kube-api-qps=1000 \\
  --kube-api-burst=2000 \\
  --leader-elect \\
  --use-service-account-credentials \\
  --concurrent-service-syncs=2 \\
  --bind-address=##NODE_IP## \\
  --secure-port=10252 \\
  --tls-cert-file=/etc/kubernetes/cert/kube-controller-manager.pem \\
  --tls-private-key-file=/etc/kubernetes/cert/kube-controller-manager-key.pem \\
  --port=0 \\
  --authentication-kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
  --client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --requestheader-allowed-names="aggregator" \\
  --requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --requestheader-extra-headers-prefix="X-Remote-Extra-" \\
  --requestheader-group-headers=X-Remote-Group \\
  --requestheader-username-headers=X-Remote-User \\
  --authorization-kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
  --cluster-signing-cert-file=/etc/kubernetes/cert/ca.pem \\
  --cluster-signing-key-file=/etc/kubernetes/cert/ca-key.pem \\
  --experimental-cluster-signing-duration=876000h \\
  --horizontal-pod-autoscaler-sync-period=10s \\
  --concurrent-deployment-syncs=10 \\
  --concurrent-gc-syncs=30 \\
  --node-cidr-mask-size=24 \\
  --service-cluster-ip-range=${SERVICE_CIDR} \\
  --pod-eviction-timeout=6m \\
  --terminated-pod-gc-threshold=10000 \\
  --root-ca-file=/etc/kubernetes/cert/ca.pem \\
  --service-account-private-key-file=/etc/kubernetes/cert/ca-key.pem \\
  --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
  --logtostderr=true \\
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```

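--port=0: disable the insecure (http) port; with this set, the --address parameter has no effect and --bind-address applies;

--secure-port=10252, --bind-address=##NODE_IP##: listen for https /metrics requests on port 10252 of the node IP;

--kubeconfig: path of the kubeconfig file that kube-controller-manager uses to connect to and authenticate with kube-apiserver;

--authentication-kubeconfig and --authorization-kubeconfig: kube-controller-manager uses them to connect to apiserver and authenticate and authorize client requests. kube-controller-manager no longer uses --tls-ca-file to verify the client certificates of requests for https metrics. If these two kubeconfig parameters are not configured, client requests to the kube-controller-manager https port are rejected (insufficient permissions).

--cluster-signing-*-file: sign the certificates created by TLS Bootstrap;

--experimental-cluster-signing-duration: the validity period of the TLS Bootstrap certificates;

--root-ca-file: the CA certificate placed into containers' ServiceAccount, used to verify the kube-apiserver certificate;

--service-account-private-key-file: the private key that signs ServiceAccount tokens; it must pair with the public key file specified by kube-apiserver's --service-account-key-file;

--service-cluster-ip-range: the Service Cluster IP range; it must match the parameter of the same name in kube-apiserver;

--leader-elect=true: run in cluster mode with leader election enabled; the node elected as leader does the work and the other nodes stay blocked;

--controllers=*,bootstrapsigner,tokencleaner: the list of enabled controllers; tokencleaner automatically cleans up expired Bootstrap tokens;

--horizontal-pod-autoscaler-*: parameters related to custom metrics, supporting autoscaling/v2alpha1;

--tls-cert-file, --tls-private-key-file: the server certificate and private key used when exporting metrics over https;

--use-service-account-credentials=true: each controller in kube-controller-manager uses its own ServiceAccount to access kube-apiserver;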
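--service-cluster-ip-range must match between kube-apiserver and kube-controller-manager. A quick check on the rendered unit files can catch a mismatch before the services start. This is a sketch: flag_value and check_same_flag are hypothetical helpers. The parsing assumes each flag appears as --name=value with no spaces in the value, which holds for the unit files generated in this document.

```bash
#!/usr/bin/env bash
# Hypothetical helper: flag_value FLAG FILE
# Prints the value of the first "--FLAG=value" occurrence in FILE.
flag_value() {
  grep -o -- "--$1=[^ ]*" "$2" | head -n 1 | cut -d= -f2-
}

# Hypothetical helper: check_same_flag FLAG FILE_A FILE_B
# Succeeds only if FLAG is present in both files with identical values.
check_same_flag() {
  local flag="$1" a="$2" b="$3" va vb
  va=$(flag_value "$flag" "$a")
  vb=$(flag_value "$flag" "$b")
  if [ -z "$va" ] || [ "$va" != "$vb" ]; then
    echo "--${flag} differs: '${va}' in ${a} vs '${vb}' in ${b}" >&2
    return 1
  fi
}
```

Once the per-node unit files have been generated in /opt/k8s/work, you could run check_same_flag service-cluster-ip-range kube-apiserver-192.168.220.100.service kube-controller-manager-192.168.220.100.service.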

Create and distribute the kube-controller-manager systemd unit file for each node

Substitute the variables in the template file to create a systemd unit file for each node:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for (( i=0; i < 3; i++ ))
  do
    sed -e "s/##NODE_IP##/${NODE_IPS[i]}/" kube-controller-manager.service.template > kube-controller-manager-${NODE_IPS[i]}.service
  done
ls kube-controller-manager*.service
```

Distribute them to all master nodes:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    scp kube-controller-manager-${node_ip}.service root@${node_ip}:/etc/systemd/system/kube-controller-manager.service
  done
```

Start the kube-controller-manager service

```bash
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "mkdir -p ${K8S_DIR}/kube-controller-manager"
    ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-controller-manager && systemctl restart kube-controller-manager"
  done
```

Check the service status

```bash
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "systemctl status kube-controller-manager|grep Active"
  done
```

Make sure the status is active (running); otherwise check the logs to find the cause:

```bash
journalctl -u kube-controller-manager
```

kube-controller-manager listens on port 10252 for https requests:

```
$ sudo netstat -lnpt | grep kube-cont
tcp        0      0 172.27.138.251:10252    0.0.0.0:*               LISTEN      108977/kube-control
```

View the exported metrics

Note: run the following command on a kube-controller-manager node.

```
$ curl -s --cacert /opt/k8s/work/ca.pem --cert /opt/k8s/work/admin.pem --key /opt/k8s/work/admin-key.pem https://192.168.220.100:10252/metrics |head
```

In my environment this command produced no output.

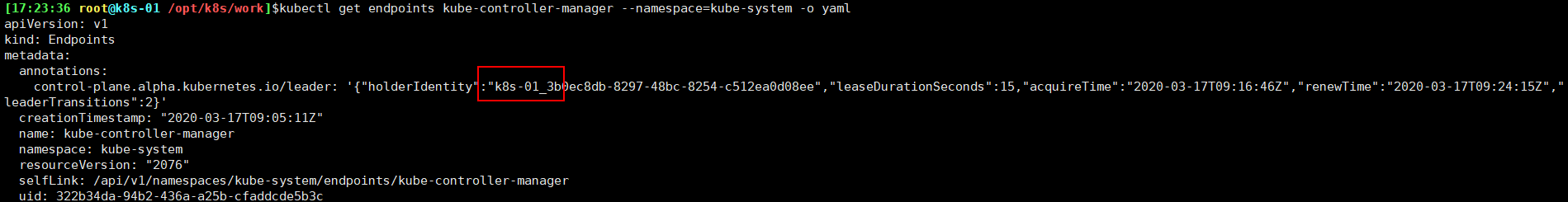

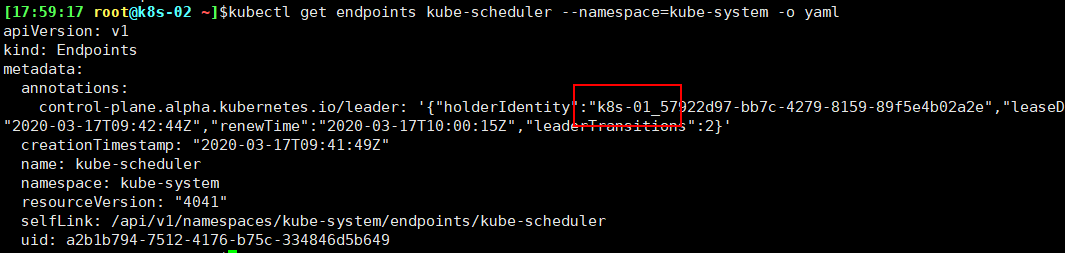

View the current leader

```
$ kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml
```

In this environment, the current leader is the k8s-01 node.
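With the Endpoints-based leader lock used here, the leader is recorded in the control-plane.alpha.kubernetes.io/leader annotation of this Endpoints object. The annotation holds JSON whose holderIdentity field has the form hostname_UUID. The sketch below extracts the node name from that value. leader_from_annotation is a hypothetical helper, and the sed parsing assumes the compact one-line JSON that kubectl prints. The example command in the comments also assumes kubectl's jsonpath escaping of the dots in the annotation key.

```bash
#!/usr/bin/env bash
# Hypothetical helper: leader_from_annotation JSON
# JSON is the value of the control-plane.alpha.kubernetes.io/leader annotation.
# Prints the hostname part of holderIdentity ("<hostname>_<uuid>"); fails if absent.
leader_from_annotation() {
  local id
  id=$(printf '%s\n' "$1" | sed -n 's/.*"holderIdentity":"\([^"]*\)".*/\1/p')
  [ -n "$id" ] || return 1
  # Assumes the hostname itself contains no underscore; cut at the first one.
  printf '%s\n' "${id%%_*}"
}

# Example (assumed jsonpath escaping for the dotted annotation key):
# leader_from_annotation "$(kubectl get endpoints kube-controller-manager -n kube-system \
#   -o jsonpath='{.metadata.annotations.control-plane\.alpha\.kubernetes\.io/leader}')"
```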

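Test the high availability of the kube-controller-manager cluster

Stop the kube-controller-manager service on one or two nodes and watch the logs of the other nodes to see whether one of them acquires leadership.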
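To make the failover test less manual, you can stop the service on the current leader and then poll until the reported leader changes. wait_for_change is a hypothetical helper. The usage in the comments assumes the same Endpoints annotation and extracts only holderIdentity, because the annotation's renewTime changes on every renewal even when the leader stays the same.

```bash
#!/usr/bin/env bash
# Hypothetical helper: wait_for_change TIMEOUT_SECONDS COMMAND [ARGS...]
# Records COMMAND's output once, then re-runs it every second until the output
# changes (prints the new output, returns 0) or TIMEOUT_SECONDS pass (returns 1).
wait_for_change() {
  local timeout="$1" before now elapsed=0
  shift
  before=$("$@")
  while [ "$elapsed" -lt "$timeout" ]; do
    sleep 1
    elapsed=$((elapsed + 1))
    now=$("$@")
    if [ "$now" != "$before" ]; then
      printf '%s\n' "$now"
      return 0
    fi
  done
  return 1
}

# Example (assumes kubectl access; compares only holderIdentity):
# current_leader() {
#   kubectl get endpoints kube-controller-manager -n kube-system \
#     -o jsonpath='{.metadata.annotations.control-plane\.alpha\.kubernetes\.io/leader}' |
#     sed -n 's/.*"holderIdentity":"\([^"]*\)".*/\1/p'
# }
# Stop kube-controller-manager on the current leader node, then:
# wait_for_change 60 current_leader
```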

References

About controller permissions and the use-service-account-credentials parameter: https://github.com/kubernetes/kubernetes/issues/48208

kubelet authentication and authorization: https://kubernetes.io/docs/admin/kubelet-authentication-authorization/#kubelet-authorization

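Deploy a highly available kube-scheduler cluster

This document describes the steps to deploy a highly available kube-scheduler cluster.

The cluster contains 3 nodes. After startup, the nodes compete in an election that makes one of them the leader, while the others stay blocked. When the leader becomes unavailable, the remaining nodes elect a new leader, which keeps the service available.

To secure communication, this document first generates an x509 certificate and private key. kube-scheduler uses this certificate in the following two cases:

communicating with the secure port of kube-apiserver;

exporting Prometheus-format metrics on the secure port (https, 10259);

Note: unless otherwise specified, all operations in this document are performed on the k8s-01 node.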

Create the kube-scheduler certificate and private key

Create the certificate signing request:

```bash
cd /opt/k8s/work
cat > kube-scheduler-csr.json << EOF
{
    "CN": "system:kube-scheduler",
    "hosts": [
      "127.0.0.1",
      "192.168.220.100",
      "192.168.220.101",
      "192.168.220.102"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
      {
        "C": "CN",
        "ST": "BeiJing",
        "L": "BeiJing",
        "O": "system:kube-scheduler",
        "OU": "opsnull"
      }
    ]
}
EOF
```

The hosts list contains the IPs of all kube-scheduler nodes;

Both CN and O are system:kube-scheduler; the built-in Kubernetes ClusterRoleBinding system:kube-scheduler grants kube-scheduler the permissions it needs to work;

Generate the certificate and private key:

```bash
cd /opt/k8s/work
cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler
ls kube-scheduler*pem
```

Distribute the generated certificate and private key to all master nodes:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    scp kube-scheduler*.pem root@${node_ip}:/etc/kubernetes/cert/
  done
```

Create and distribute the kubeconfig file

kube-scheduler uses a kubeconfig file to access apiserver. The file contains the apiserver address, the embedded CA certificate and the kube-scheduler certificate:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server="https://##NODE_IP##:6443" \
  --kubeconfig=kube-scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler \
  --client-certificate=kube-scheduler.pem \
  --client-key=kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-scheduler.kubeconfig

kubectl config set-context system:kube-scheduler \
  --cluster=kubernetes \
  --user=system:kube-scheduler \
  --kubeconfig=kube-scheduler.kubeconfig

kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig
```

Distribute the kubeconfig to all master nodes:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    sed -e "s/##NODE_IP##/${node_ip}/" kube-scheduler.kubeconfig > kube-scheduler-${node_ip}.kubeconfig
    scp kube-scheduler-${node_ip}.kubeconfig root@${node_ip}:/etc/kubernetes/kube-scheduler.kubeconfig
  done
```

Create the kube-scheduler configuration file

```bash
cd /opt/k8s/work
cat >kube-scheduler.yaml.template <<EOF
apiVersion: kubescheduler.config.k8s.io/v1alpha1
kind: KubeSchedulerConfiguration
bindTimeoutSeconds: 600
clientConnection:
  burst: 200
  kubeconfig: "/etc/kubernetes/kube-scheduler.kubeconfig"
  qps: 100
enableContentionProfiling: false
enableProfiling: true
hardPodAffinitySymmetricWeight: 1
healthzBindAddress: ##NODE_IP##:10251
leaderElection:
  leaderElect: true
metricsBindAddress: ##NODE_IP##:10251
EOF
```

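clientConnection.kubeconfig: path of the kubeconfig file that kube-scheduler uses to connect to and authenticate with kube-apiserver;

leaderElection.leaderElect: true: run in cluster mode with leader election enabled; the node elected as leader does the work and the other nodes stay blocked;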

Substitute the variables in the template file:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for (( i=0; i < 3; i++ ))
  do
    sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" kube-scheduler.yaml.template > kube-scheduler-${NODE_IPS[i]}.yaml
  done
ls kube-scheduler*.yaml
```

NODE_NAMES and NODE_IPS are bash arrays of the same length, holding the node names and the corresponding IPs;

Distribute the kube-scheduler configuration file to all master nodes:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    scp kube-scheduler-${node_ip}.yaml root@${node_ip}:/etc/kubernetes/kube-scheduler.yaml
  done
```

On each node the file is renamed to kube-scheduler.yaml;

Create the kube-scheduler systemd unit template file

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > kube-scheduler.service.template <<EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
WorkingDirectory=${K8S_DIR}/kube-scheduler
ExecStart=/opt/k8s/bin/kube-scheduler \\
  --config=/etc/kubernetes/kube-scheduler.yaml \\
  --bind-address=##NODE_IP## \\
  --secure-port=10259 \\
  --port=0 \\
  --tls-cert-file=/etc/kubernetes/cert/kube-scheduler.pem \\
  --tls-private-key-file=/etc/kubernetes/cert/kube-scheduler-key.pem \\
  --authentication-kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \\
  --client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --requestheader-allowed-names="" \\
  --requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --requestheader-extra-headers-prefix="X-Remote-Extra-" \\
  --requestheader-group-headers=X-Remote-Group \\
  --requestheader-username-headers=X-Remote-User \\
  --authorization-kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \\
  --logtostderr=true \\
  --v=2
Restart=always
RestartSec=5
StartLimitInterval=0

[Install]
WantedBy=multi-user.target
EOF
```

Create and distribute the kube-scheduler systemd unit file for each node

Substitute the variables in the template file to create a systemd unit file for each node:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for (( i=0; i < 3; i++ ))
  do
    sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" kube-scheduler.service.template > kube-scheduler-${NODE_IPS[i]}.service
  done
ls kube-scheduler*.service
```

Distribute the systemd unit files to all master nodes:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    scp kube-scheduler-${node_ip}.service root@${node_ip}:/etc/systemd/system/kube-scheduler.service
  done
```

Start the kube-scheduler service

```bash
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "mkdir -p ${K8S_DIR}/kube-scheduler"
    ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-scheduler && systemctl restart kube-scheduler"
  done
```

Check the service status

```bash
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "systemctl status kube-scheduler|grep Active"
  done
```

Make sure the status is active (running); otherwise check the logs to find the cause:

```bash
journalctl -u kube-scheduler -f
```

View the exported metrics

Note: run the following commands on a kube-scheduler node.

kube-scheduler listens on ports 10251 and 10259:

Both ports serve /metrics and /healthz. Port 10251 is accessed over http and port 10259 over https, as in the commands below.

```
$ sudo netstat -lnpt |grep kube-sch
tcp        0      0 192.168.220.101:10251   0.0.0.0:*               LISTEN      2102/kube-scheduler
tcp        0      0 192.168.220.101:10259   0.0.0.0:*               LISTEN      2102/kube-scheduler
```

```
$ curl -s http://192.168.220.100:10251/metrics |head
apiserver_audit_event_total 0
apiserver_audit_requests_rejected_total 0
apiserver_client_certificate_expiration_seconds_bucket{le="0"} 0
apiserver_client_certificate_expiration_seconds_bucket{le="1800"} 0
```

```
$ curl -s --cacert /opt/k8s/work/ca.pem --cert /opt/k8s/work/admin.pem --key /opt/k8s/work/admin-key.pem https://192.168.220.100:10259/metrics |head
```

In my environment this command produced no output.
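When you check these endpoints by hand, it can help to pull a single sample out of the Prometheus text output. metric_value below is a hypothetical awk-based helper. It assumes label values contain no spaces, which is true for the samples shown above but not guaranteed in general, and it skips the # HELP and # TYPE comment lines.

```bash
#!/usr/bin/env bash
# Hypothetical helper: metric_value NAME
# Reads Prometheus text exposition format on stdin and prints the value of the
# first sample whose metric name (labels stripped) equals NAME.
# Assumes label values contain no spaces.
metric_value() {
  awk -v name="$1" '
    /^#/ { next }                  # skip HELP and TYPE comment lines
    {
      key = $1
      sub(/[{].*/, "", key)        # drop the label set, if any
      if (key == name) { print $2; exit }
    }'
}
```

For example: curl -s http://192.168.220.100:10251/metrics | metric_value apiserver_audit_event_total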

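View the current leader

```
$ kubectl get endpoints kube-scheduler --namespace=kube-system -o yaml
```

In this environment, the current leader is the k8s-01 node.

Test the high availability of the kube-scheduler cluster

Stop the kube-scheduler service on any one or two master nodes and check whether another node acquires leadership.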

Deploy the worker nodes

Kubernetes worker nodes run the following components:

containerd

kubelet

kube-proxy

calico

kube-nginx

Note: unless otherwise specified, all operations in this document are performed on the k8s-01 node.

Install dependency packages

```bash
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "yum install -y epel-release" &
    ssh root@${node_ip} "yum install -y chrony conntrack ipvsadm ipset jq iptables curl sysstat libseccomp wget socat git" &
  done
# Wait for the background installs on all nodes to finish before continuing
wait
```

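apiserver high availability

This document explains how to use the nginx layer-4 transparent proxy so that Kubernetes worker node components can reach the kube-apiserver cluster with high availability.

The kube-controller-manager and kube-scheduler on the control-plane nodes run as multiple instances and connect to the local kube-apiserver, so high availability holds as long as one instance is healthy;

Pods in the cluster reach kube-apiserver through the K8S service name kubernetes. DNS resolves this name to the Service's cluster IP, and the endpoints behind that Service are all the kube-apiserver nodes, so this path is highly available as well;

Each node runs an nginx process with multiple apiserver instances as backends; nginx performs health checks and load balancing across them;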

Download and compile nginx

Download the source code:

```bash
cd /opt/k8s/work
wget http://nginx.org/download/nginx-1.15.3.tar.gz
tar -xzvf nginx-1.15.3.tar.gz
```

Configure the build options:

```bash
cd /opt/k8s/work/nginx-1.15.3
mkdir nginx-prefix
yum install -y gcc make
./configure --with-stream --without-http --prefix=$(pwd)/nginx-prefix --without-http_uwsgi_module --without-http_scgi_module --without-http_fastcgi_module
```

--with-stream: enable layer-4 transparent forwarding (TCP proxy);

--without-xxx: disable all other features, so the resulting dynamically linked binary has minimal dependencies;

Compile and install:

```bash
cd /opt/k8s/work/nginx-1.15.3
make && make install
```

Verify the compiled nginx

```
$ cd /opt/k8s/work/nginx-1.15.3
$ ./nginx-prefix/sbin/nginx -v
nginx version: nginx/1.15.3
```

Install and deploy nginx

Create the directory structure:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "mkdir -p /opt/k8s/kube-nginx/{conf,logs,sbin}"
  done
```

Copy the binary:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "mkdir -p /opt/k8s/kube-nginx/{conf,logs,sbin}"
    scp /opt/k8s/work/nginx-1.15.3/nginx-prefix/sbin/nginx root@${node_ip}:/opt/k8s/kube-nginx/sbin/kube-nginx
    ssh root@${node_ip} "chmod a+x /opt/k8s/kube-nginx/sbin/*"
  done
```

Configure nginx and enable layer-4 transparent forwarding:

```bash
cd /opt/k8s/work
cat > kube-nginx.conf << \EOF
worker_processes 1;

events {
    worker_connections  1024;
}

stream {
    upstream backend {
        hash $remote_addr consistent;
        server 192.168.220.100:6443        max_fails=3 fail_timeout=30s;
        server 192.168.220.101:6443        max_fails=3 fail_timeout=30s;
        server 192.168.220.102:6443        max_fails=3 fail_timeout=30s;
    }

    server {
        listen 127.0.0.1:8443;
        proxy_connect_timeout 1s;
        proxy_pass backend;
    }
}
EOF
```

The server list in upstream backend holds the node IPs of all kube-apiserver instances in the cluster; adjust it to match your environment;
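Because the upstream list has to track the kube-apiserver node IPs, it can be generated from NODE_IPS instead of edited by hand. This sketch assumes that NODE_IPS from environment.sh holds exactly the kube-apiserver IPs and that every instance listens on 6443. gen_upstream_servers is a hypothetical helper that reproduces the server lines used above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: gen_upstream_servers
# Prints one nginx "server" line per kube-apiserver IP in NODE_IPS, using the
# same port and failure-detection parameters as kube-nginx.conf above.
gen_upstream_servers() {
  local ip
  for ip in "${NODE_IPS[@]}"; do
    printf '        server %s:6443        max_fails=3 fail_timeout=30s;\n' "$ip"
  done
}
```

The heredoc above is quoted (<< \EOF) so that the shell does not expand nginx's $remote_addr. To use the generated lines, paste them into the upstream backend block. Alternatively, assemble the file from quoted heredocs for the static parts plus the function's output.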

Distribute the configuration file:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    scp kube-nginx.conf root@${node_ip}:/opt/k8s/kube-nginx/conf/kube-nginx.conf
  done
```

Configure the systemd unit file and start the service

Configure the kube-nginx systemd unit file:

```bash
cd /opt/k8s/work
cat > kube-nginx.service <<EOF
[Unit]
Description=kube-apiserver nginx proxy
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=forking
ExecStartPre=/opt/k8s/kube-nginx/sbin/kube-nginx -c /opt/k8s/kube-nginx/conf/kube-nginx.conf -p /opt/k8s/kube-nginx -t
ExecStart=/opt/k8s/kube-nginx/sbin/kube-nginx -c /opt/k8s/kube-nginx/conf/kube-nginx.conf -p /opt/k8s/kube-nginx
ExecReload=/opt/k8s/kube-nginx/sbin/kube-nginx -c /opt/k8s/kube-nginx/conf/kube-nginx.conf -p /opt/k8s/kube-nginx -s reload
PrivateTmp=true
Restart=always
RestartSec=5
StartLimitInterval=0
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

Distribute the systemd unit file:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    scp kube-nginx.service root@${node_ip}:/etc/systemd/system/
  done
```

Start the kube-nginx service:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-nginx && systemctl restart kube-nginx"
  done
```

Check the kube-nginx service status

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "systemctl status kube-nginx |grep 'Active:'"
  done
```

If a node is not active (running), check its logs:

```bash
journalctl -u kube-nginx
```
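Besides the systemd status, you can confirm on each node that kube-nginx actually accepts connections on 127.0.0.1:8443. The sketch below relies on bash's /dev/tcp pseudo-device and the coreutils timeout command. port_open is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: port_open HOST PORT [TIMEOUT_SECONDS]
# Succeeds if a TCP connection to HOST:PORT can be opened within the timeout
# (default 2s). Uses bash's /dev/tcp redirection, so it needs bash, not sh.
port_open() {
  local host="$1" port="$2" secs="${3:-2}"
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}
```

On a worker node, port_open 127.0.0.1 8443 && echo "kube-nginx is accepting connections" checks only that the listener is up. It does not check that the kube-apiserver backends are healthy.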

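Deploy the containerd component

containerd implements the Kubernetes Container Runtime Interface (CRI) and provides the core container runtime functions, such as image management and container management. Compared with dockerd it is simpler, more robust and more portable.

Note:

Unless otherwise specified, all operations in this document are performed on the k8s-01 node.

If you want to use docker, see the appendix F.部署docker.md;

docker must be used together with flannel, and flannel must be installed first;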

Download and distribute the binaries

Download the binaries:

```bash
cd /opt/k8s/work
wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.17.0/crictl-v1.17.0-linux-amd64.tar.gz \
  https://github.com/opencontainers/runc/releases/download/v1.0.0-rc10/runc.amd64 \
  https://github.com/containernetworking/plugins/releases/download/v0.8.5/cni-plugins-linux-amd64-v0.8.5.tgz \
  https://github.com/containerd/containerd/releases/download/v1.3.3/containerd-1.3.3.linux-amd64.tar.gz
```

Extract them:

```bash
cd /opt/k8s/work
mkdir containerd
tar -xvf containerd-1.3.3.linux-amd64.tar.gz -C containerd
tar -xvf crictl-v1.17.0-linux-amd64.tar.gz

mkdir cni-plugins
sudo tar -xvf cni-plugins-linux-amd64-v0.8.5.tgz -C cni-plugins

sudo mv runc.amd64 runc
```

Distribute the binaries to all worker nodes:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    scp containerd/bin/* crictl cni-plugins/* runc root@${node_ip}:/opt/k8s/bin
    ssh root@${node_ip} "chmod a+x /opt/k8s/bin/* && mkdir -p /etc/cni/net.d"
  done
```

Create and distribute the containerd configuration file

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat << EOF | sudo tee containerd-config.toml
version = 2
root = "${CONTAINERD_DIR}/root"
state = "${CONTAINERD_DIR}/state"

[plugins]
  [plugins."io.containerd.grpc.v1.cri"]
    sandbox_image = "registry.cn-beijing.aliyuncs.com/images_k8s/pause-amd64:3.1"
    [plugins."io.containerd.grpc.v1.cri".cni]
      bin_dir = "/opt/k8s/bin"
      conf_dir = "/etc/cni/net.d"
  [plugins."io.containerd.runtime.v1.linux"]
    shim = "containerd-shim"
    runtime = "runc"
    runtime_root = ""
    no_shim = false
    shim_debug = false
EOF
```

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "mkdir -pv /etc/containerd/ ${CONTAINERD_DIR}/{root,state}"
    scp containerd-config.toml root@${node_ip}:/etc/containerd/config.toml
  done
```

Create the containerd systemd unit file

```bash
cd /opt/k8s/work
cat <<EOF | sudo tee containerd.service
[Unit]
Description=containerd container runtime
Documentation=https://containerd.io
After=network.target

[Service]
Environment="PATH=/opt/k8s/bin:/bin:/sbin:/usr/bin:/usr/sbin"
ExecStartPre=/sbin/modprobe overlay
ExecStart=/opt/k8s/bin/containerd
Restart=always
RestartSec=5
Delegate=yes
KillMode=process
OOMScoreAdjust=-999
LimitNOFILE=1048576
LimitNPROC=infinity
LimitCORE=infinity

[Install]
WantedBy=multi-user.target
EOF
```

Distribute the systemd unit file and start the containerd service

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    scp containerd.service root@${node_ip}:/etc/systemd/system
    ssh root@${node_ip} "systemctl daemon-reload && systemctl enable containerd && systemctl restart containerd"
  done
```

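Create and distribute the crictl configuration file

crictl is a command-line tool for CRI-compatible container runtimes, offering functionality similar to the docker command. See the official documentation for details.

```bash
cd /opt/k8s/work
cat << EOF | sudo tee crictl.yaml
runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
timeout: 10
debug: false
EOF
```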

Distribute it to all worker nodes:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    scp crictl.yaml root@${node_ip}:/etc/crictl.yaml
  done
```

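Deploy the kubelet component

kubelet runs on every worker node. It receives requests from kube-apiserver, manages Pod containers, and executes interactive commands such as exec, run and logs.

On startup, kubelet automatically registers the node with kube-apiserver, and its built-in cadvisor collects and monitors the node's resource usage.

For security, this deployment disables kubelet's insecure http port and authenticates and authorizes every request. Unauthorized access is rejected, including unauthorized requests from apiserver or heapster.

Note: unless otherwise specified, all operations in this document are performed on the k8s-01 node.

Download and distribute the kubelet binary

See 05-1.部署master节点 (deploying the master nodes).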

Create the kubelet bootstrap kubeconfig file

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_name in ${NODE_NAMES[@]}
  do
    echo ">>> ${node_name}"

    # Create a bootstrap token for this node
    export BOOTSTRAP_TOKEN=$(kubeadm token create \
      --description kubelet-bootstrap-token \
      --groups system:bootstrappers:${node_name} \
      --kubeconfig ~/.kube/config)

    # Cluster parameters
    kubectl config set-cluster kubernetes \
      --certificate-authority=/etc/kubernetes/cert/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

    # Client authentication parameters (the bootstrap token)
    kubectl config set-credentials kubelet-bootstrap \
      --token=${BOOTSTRAP_TOKEN} \
      --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

    # Context parameters
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=kubelet-bootstrap \
      --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

    # Default context
    kubectl config use-context default --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig
  done
```

The kubeconfig contains a token rather than a certificate. After bootstrap finishes, kube-controller-manager creates the client and server certificates for kubelet;

View the tokens kubeadm created for each node:

```
$ kubeadm token list --kubeconfig ~/.kube/config
TOKEN                     TTL   EXPIRES                     USAGES                   DESCRIPTION               EXTRA GROUPS
6uruzm.8nd2ni8sbc090k9w   23h   2020-03-20T21:03:19+08:00   authentication,signing   kubelet-bootstrap-token   system:bootstrappers:k8s-02
fegl8v.1xnn1q1zqmtrt8k7   23h   2020-03-20T21:03:18+08:00   authentication,signing   kubelet-bootstrap-token   system:bootstrappers:k8s-01
vti5zp.vfz3eeag70rhdhcg   23h   2020-03-20T21:03:19+08:00   authentication,signing   kubelet-bootstrap-token   system:bootstrappers:k8s-03
```

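The token is valid for 1 day. After it expires, it can no longer be used to bootstrap kubelet, and kube-controller-manager's tokencleaner removes it;

When kube-apiserver receives kubelet's bootstrap token, it sets the request's user to `system:bootstrap:<Token ID>` and its group to system:bootstrappers. A ClusterRoleBinding is set up for this group later;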
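The token format and the resulting user name can be illustrated with two small bash helpers. A bootstrap token has the form token-id.token-secret: a 6-character id and a 16-character secret, both drawn from [a-z0-9]. The tokens listed above follow this format. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: is_bootstrap_token TOKEN
# Checks the bootstrap token format: 6-character id, a dot,
# 16-character secret, lowercase letters and digits only.
is_bootstrap_token() {
  [[ "$1" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# Hypothetical helper: bootstrap_user TOKEN
# Prints the user name kube-apiserver assigns to a request authenticated with
# TOKEN, i.e. system:bootstrap:<token id>.
bootstrap_user() {
  is_bootstrap_token "$1" || return 1
  printf 'system:bootstrap:%s\n' "${1%%.*}"
}
```

For instance, the k8s-01 token listed above, fegl8v.1xnn1q1zqmtrt8k7, maps to the user system:bootstrap:fegl8v. This has the same shape as system:bootstrap:82jfrm in the kubelet error log later in this section.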

Distribute the bootstrap kubeconfig files to all worker nodes

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_name in ${NODE_NAMES[@]}
  do
    echo ">>> ${node_name}"
    scp kubelet-bootstrap-${node_name}.kubeconfig root@${node_name}:/etc/kubernetes/kubelet-bootstrap.kubeconfig
  done
```

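Create and distribute the kubelet parameter configuration file

Starting with v1.10, some kubelet parameters must be set in the configuration file; for those parameters, kubelet --help shows:

DEPRECATED: This parameter should be set via the config file specified by the Kubelet's --config flag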

Create the kubelet parameter configuration file template (for the configurable options, see the comments in the source code):

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > kubelet-config.yaml.template <<EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: "##NODE_IP##"
staticPodPath: ""
syncFrequency: 1m
fileCheckFrequency: 20s
httpCheckFrequency: 20s
staticPodURL: ""
port: 10250
readOnlyPort: 0
rotateCertificates: true
serverTLSBootstrap: true
authentication:
  anonymous:
    enabled: false
  webhook:
    enabled: true
  x509:
    clientCAFile: "/etc/kubernetes/cert/ca.pem"
authorization:
  mode: Webhook
registryPullQPS: 0
registryBurst: 20
eventRecordQPS: 0
eventBurst: 20
enableDebuggingHandlers: true
enableContentionProfiling: true
healthzPort: 10248
healthzBindAddress: "##NODE_IP##"
clusterDomain: "${CLUSTER_DNS_DOMAIN}"
clusterDNS:
  - "${CLUSTER_DNS_SVC_IP}"
nodeStatusUpdateFrequency: 10s
nodeStatusReportFrequency: 1m
imageMinimumGCAge: 2m
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
volumeStatsAggPeriod: 1m
kubeletCgroups: ""
systemCgroups: ""
cgroupRoot: ""
cgroupsPerQOS: true
cgroupDriver: cgroupfs
runtimeRequestTimeout: 10m
hairpinMode: promiscuous-bridge
maxPods: 220
podCIDR: "${CLUSTER_CIDR}"
podPidsLimit: -1
resolvConf: /etc/resolv.conf
maxOpenFiles: 1000000
kubeAPIQPS: 1000
kubeAPIBurst: 2000
serializeImagePulls: false
evictionHard:
  memory.available:  "100Mi"
  nodefs.available:  "10%"
  nodefs.inodesFree: "5%"
  imagefs.available: "15%"
evictionSoft: {}
enableControllerAttachDetach: true
failSwapOn: true
containerLogMaxSize: 20Mi
containerLogMaxFiles: 10
systemReserved: {}
kubeReserved: {}
systemReservedCgroup: ""
kubeReservedCgroup: ""
enforceNodeAllocatable: ["pods"]
EOF
```

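address: the address on which the kubelet secure port (https, 10250) listens; it must not be 127.0.0.1, otherwise kube-apiserver, heapster, etc. cannot call the kubelet API;

readOnlyPort=0: disable the read-only port (default 10255), equivalent to leaving it unspecified;

authentication.anonymous.enabled: set to false to disallow anonymous access to port 10250;

authentication.x509.clientCAFile: the CA certificate that signs client certificates; enables HTTPS client certificate authentication;

authentication.webhook.enabled=true: enable HTTPS bearer token authentication;

Requests (from kube-apiserver or other clients) that pass neither x509 certificate authentication nor webhook authentication are rejected with Unauthorized;

authorization.mode=Webhook: kubelet uses the SubjectAccessReview API to ask kube-apiserver whether a user or group has permission to operate on a resource (RBAC);

rotateCertificates, serverTLSBootstrap: automatically rotate the client and server certificates. These correspond to the RotateKubeletClientCertificate and RotateKubeletServerCertificate feature gates. The certificate validity depends on kube-controller-manager's --experimental-cluster-signing-duration parameter;

kubelet must be run as root;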

Create and distribute the kubelet configuration file for each node:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    sed -e "s/##NODE_IP##/${node_ip}/" kubelet-config.yaml.template > kubelet-config-${node_ip}.yaml.template
    scp kubelet-config-${node_ip}.yaml.template root@${node_ip}:/etc/kubernetes/kubelet-config.yaml
  done
```
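Every template in this section is rendered with sed, and a typo in a placeholder would silently leave it in the final file. You can run a check like the following on the generated files before copying them out. assert_rendered is a hypothetical helper, and the sketch assumes that all placeholders follow the ##UPPER_CASE## pattern used in this document.

```bash
#!/usr/bin/env bash
# Hypothetical helper: assert_rendered FILE...
# Fails (and prints the offending lines) if any file still contains a
# ##PLACEHOLDER## token such as ##NODE_IP## or ##NODE_NAME##.
assert_rendered() {
  local f rc=0
  for f in "$@"; do
    if grep -n '##[A-Z_]*##' "$f"; then
      echo "unrendered placeholder in ${f}" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

For example, run assert_rendered kubelet-config-*.yaml.template in /opt/k8s/work before the scp step.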

Create and distribute the kubelet systemd unit file

Create the kubelet systemd unit template file:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > kubelet.service.template <<EOF
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=containerd.service
Requires=containerd.service

[Service]
WorkingDirectory=${K8S_DIR}/kubelet
ExecStart=/opt/k8s/bin/kubelet \\
  --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \\
  --cert-dir=/etc/kubernetes/cert \\
  --network-plugin=cni \\
  --cni-conf-dir=/etc/cni/net.d \\
  --container-runtime=remote \\
  --container-runtime-endpoint=unix:///var/run/containerd/containerd.sock \\
  --root-dir=${K8S_DIR}/kubelet \\
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \\
  --config=/etc/kubernetes/kubelet-config.yaml \\
  --hostname-override=##NODE_NAME## \\
  --image-pull-progress-deadline=15m \\
  --volume-plugin-dir=${K8S_DIR}/kubelet/kubelet-plugins/volume/exec/ \\
  --logtostderr=true \\
  --v=2
Restart=always
RestartSec=5
StartLimitInterval=0

[Install]
WantedBy=multi-user.target
EOF
```

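If you use docker instead of containerd, comment out the following two lines in the template, or change containerd.service to docker.service:

After=containerd.service

Requires=containerd.service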

If the --hostname-override option is set, kube-proxy must set the same option as well; otherwise kube-proxy cannot find the Node;

--bootstrap-kubeconfig: points to the bootstrap kubeconfig file; the kubelet uses the user name and token in this file to send the TLS Bootstrapping request to kube-apiserver;

After Kubernetes approves the kubelet CSR, the kubelet creates the certificate and private key files in the --cert-dir directory and then writes the --kubeconfig file;

--pod-infra-container-image: do not use Red Hat's pod-infrastructure:latest image, because it cannot reap zombie processes;
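Because the kubelet and kube-proxy must agree on the node name, the rendered files can be compared before the services are started. A minimal sketch with hypothetical function names; it assumes the flag format of the kubelet unit above (--hostname-override=NAME) and the top-level hostnameOverride: key of the kube-proxy configuration template created later in this document.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: read the node name from a rendered kubelet unit file
# and from a rendered kube-proxy config file, then compare the two values.
kubelet_hostname() {
  # Matches e.g. "  --hostname-override=k8s-01 \" and prints "k8s-01".
  sed -n 's/.*--hostname-override=\([^[:space:]]*\).*/\1/p' "$1" | head -n 1
}

kube_proxy_hostname() {
  # Matches a top-level "hostnameOverride: k8s-01" line.
  sed -n 's/^hostnameOverride:[[:space:]]*\([^[:space:]]*\).*/\1/p' "$1" | head -n 1
}

# check_hostname_override KUBELET_UNIT KUBE_PROXY_CONFIG
check_hostname_override() {
  local kubelet_name proxy_name
  kubelet_name=$(kubelet_hostname "$1")
  proxy_name=$(kube_proxy_hostname "$2")
  if [ -n "$kubelet_name" ] && [ "$kubelet_name" = "$proxy_name" ]; then
    echo "ok: ${kubelet_name}"
  else
    echo "mismatch: kubelet='${kubelet_name}' kube-proxy='${proxy_name}'" >&2
    return 1
  fi
}
```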

Create and distribute the kubelet systemd unit file for each node:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_name in ${NODE_NAMES[@]}
  do
    echo ">>> ${node_name}"
    sed -e "s/##NODE_NAME##/${node_name}/" kubelet.service.template > kubelet-${node_name}.service
    scp kubelet-${node_name}.service root@${node_name}:/etc/systemd/system/kubelet.service
  done
```

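Grant kube-apiserver access to the kubelet API

When commands such as kubectl exec, run and logs are executed, kube-apiserver forwards the request to the kubelet's https port. The following RBAC rule grants the user name of the certificate used by kube-apiserver (kubernetes.pem, CN: kubernetes-master) permission to access the kubelet API: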

```bash
kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes-master
```

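Bootstrap Token Auth and granting permissions

When the kubelet starts, it checks whether the file given by --kubeconfig exists; if it does not, the kubelet uses the kubeconfig file given by --bootstrap-kubeconfig to send a certificate signing request (CSR) to kube-apiserver.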

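When kube-apiserver receives the CSR, it authenticates the token it carries; once authentication succeeds, it sets the request's user to system:bootstrap:<Token ID> and its group to system:bootstrappers. This process is called Bootstrap Token Auth.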

By default, this user and group have no permission to create CSRs, so the kubelet fails to start with the following error log:

```
$ sudo journalctl -u kubelet -a |grep -A 2 'certificatesigningrequests'
May 26 12:13:41 zhangjun-k8s-01 kubelet[128468]: I0526 12:13:41.798230  128468 certificate_manager.go:366] Rotating certificates
May 26 12:13:41 zhangjun-k8s-01 kubelet[128468]: E0526 12:13:41.801997  128468 certificate_manager.go:385] Failed while requesting a signed certificate from the master: cannot create certificate signing request: certificatesigningrequests.certificates.k8s.io is forbidden: User "system:bootstrap:82jfrm" cannot create resource "certificatesigningrequests" in API group "certificates.k8s.io" at the cluster scope
```

The fix is to create a clusterrolebinding that binds the group system:bootstrappers to the clusterrole system:node-bootstrapper:

```bash
kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --group=system:bootstrappers
```
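The user name in the error above (system:bootstrap:82jfrm) comes from the bootstrap token ID. As a sketch: bootstrap tokens have the form <token-id>.<token-secret>, a 6-character ID and a 16-character secret drawn from [a-z0-9]. The hypothetical helper below derives the user name kube-apiserver assigns after Bootstrap Token Auth, which can help when matching REQUESTOR values in kubectl get csr output.

```bash
#!/usr/bin/env bash
# bootstrap_user TOKEN (hypothetical helper): print the user name that
# kube-apiserver assigns to a bootstrap token after Bootstrap Token Auth.
# Token format: 6-char token ID, ".", 16-char secret, all [a-z0-9].
bootstrap_user() {
  local token="$1"
  if [[ "$token" =~ ^([a-z0-9]{6})\.[a-z0-9]{16}$ ]]; then
    # The group of such requests is system:bootstrappers.
    echo "system:bootstrap:${BASH_REMATCH[1]}"
  else
    echo "not a bootstrap token: ${token}" >&2
    return 1
  fi
}
```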

Resolving the --random-fully message

The kubelet may log the following message:

```
Mar 18 10:46:05 k8s-03 kubelet[2630]: I0318 10:46:05.992018    2630 kubelet_network_linux.go:111] Not using `--random-fully` in the MASQUERADE rule for iptables because the local version of iptables does not support it
```

This is an Info-level entry (the I prefix) meaning that the installed iptables is too old to support --random-fully. To resolve it, build and install iptables 1.6.2 from source, then restart the kubelet:

```bash
yum install gcc make libnftnl-devel libmnl-devel autoconf automake libtool bison flex libnetfilter_conntrack-devel libnetfilter_queue-devel libpcap-devel
export LC_ALL=C
wget https://www.netfilter.org/projects/iptables/files/iptables-1.6.2.tar.bz2
tar -xvf iptables-1.6.2.tar.bz2
cd iptables-1.6.2
./autogen.sh
./configure
make -j4
make install
systemctl restart kubelet.service
```
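Before rebuilding, it may be worth checking whether the installed iptables is already new enough. A minimal sketch, assuming (as the build steps above imply) that 1.6.2 is the minimum version needed for --random-fully and that GNU sort with -V is available; version_ge is a hypothetical helper.

```bash
#!/usr/bin/env bash
# version_ge A B: succeed if version A >= version B (GNU sort -V ordering).
# Sorting ascending puts the smaller version first; if that is B, then A >= B.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Example on a node (iptables --version prints e.g. "iptables v1.4.21"):
#   current=$(iptables --version | awk '{print $2}' | tr -d v)
#   version_ge "$current" 1.6.2 || echo "iptables ${current} lacks --random-fully"
```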

Automatically approve CSRs and generate the kubelet client certificate

After the kubelet creates a CSR, the CSR has to be approved. There are two ways:

kube-controller-manager approves it automatically;

approve it manually with kubectl certificate approve;

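The csrapproving controller in kube-controller-manager uses the SubjectAccessReview API to check whether the kubelet request (whose group is system:bootstrappers) has the corresponding permission; once the CSR is approved, kube-controller-manager issues the client certificate for the kubelet. Create three ClusterRoleBindings (plus the ClusterRole used by the last one) that grant the groups system:bootstrappers and system:nodes permission to approve client certificates, renew client certificates and renew server certificates (server CSRs are approved manually; see below):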

```bash
cd /opt/k8s/work
cat > csr-crb.yaml <<EOF
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: auto-approve-csrs-for-group
subjects:
- kind: Group
  name: system:bootstrappers
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
  apiGroup: rbac.authorization.k8s.io
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-client-cert-renewal
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
  apiGroup: rbac.authorization.k8s.io
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: approve-node-server-renewal-csr
rules:
- apiGroups: ["certificates.k8s.io"]
  resources: ["certificatesigningrequests/selfnodeserver"]
  verbs: ["create"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-server-cert-renewal
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: approve-node-server-renewal-csr
  apiGroup: rbac.authorization.k8s.io
EOF
kubectl apply -f csr-crb.yaml
```

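auto-approve-csrs-for-group: automatically approves a node's first CSR; note that for the first CSR the requesting group is system:bootstrappers;

node-client-cert-renewal: automatically approves renewal of a node's expiring client certificate; the group of the automatically generated certificate is system:nodes;

node-server-cert-renewal: automatically approves renewal of a node's expiring server certificate; the group of the automatically generated certificate is system:nodes;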

Start the kubelet service

```bash
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
  do
    echo ">>> ${node_ip}"
    ssh root@${node_ip} "mkdir -p ${K8S_DIR}/kubelet/kubelet-plugins/volume/exec/"
    ssh root@${node_ip} "/usr/sbin/swapoff -a"
    ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kubelet && systemctl restart kubelet"
  done
```
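The loop runs swapoff -a because, by default, the kubelet refuses to start while swap is enabled; note that swapoff -a only lasts until the next reboot unless the swap entries in /etc/fstab are also disabled. A minimal sketch of a check (swap_active is a hypothetical helper) that reads the /proc/swaps format: one header line followed by one line per active swap area.

```bash
#!/usr/bin/env bash
# swap_active [FILE]: succeed if FILE (default /proc/swaps) lists any active
# swap area, i.e. has at least one line after the header line.
swap_active() {
  local swaps_file="${1:-/proc/swaps}"
  [ "$(awk 'NR > 1' "$swaps_file" | wc -l)" -gt 0 ]
}

# Example on a node:
#   swap_active && echo "swap is still enabled on $(hostname)"
```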

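After it starts, the kubelet uses --bootstrap-kubeconfig to send a CSR to kube-apiserver. Once the CSR is approved, kube-controller-manager signs the TLS client certificate, and the kubelet saves the certificate and private key and writes the --kubeconfig file.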

Note: kube-controller-manager must be configured with the --cluster-signing-cert-file and --cluster-signing-key-file parameters; otherwise it does not issue certificates for TLS Bootstrap.

Check the kubelet status

After a short wait, the client CSRs of all three nodes are approved automatically:

```
$ kubectl get csr
NAME        AGE   REQUESTOR                     CONDITION
csr-5rwzm   43s   system:node:zhangjun-k8s-01   Pending
csr-65nms   55s   system:bootstrap:2sb8wy       Approved,Issued
csr-8t5hj   42s   system:node:zhangjun-k8s-02   Pending
csr-jkhhs   41s   system:node:zhangjun-k8s-03   Pending
csr-jv7dn   56s   system:bootstrap:ta7onm       Approved,Issued
csr-vb6p5   54s   system:bootstrap:xk27zp       Approved,Issued
```

The Pending CSRs are for the kubelet server certificates and must be approved manually; see below.

All nodes have registered (the NotReady status is expected and clears once the network plugin is installed):

```
$ kubectl get node
NAME              STATUS     ROLES    AGE   VERSION
zhangjun-k8s-01   NotReady   <none>   10h   v1.16.6
zhangjun-k8s-02   NotReady   <none>   10h   v1.16.6
zhangjun-k8s-03   NotReady   <none>   10h   v1.16.6
```

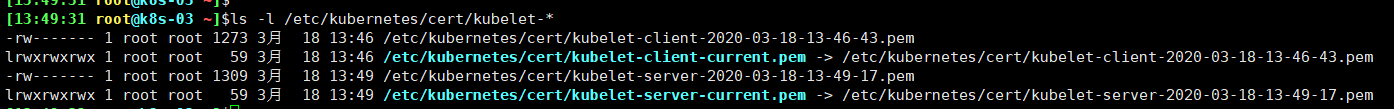

Each node now has a kubeconfig file and a client certificate and private key issued through kube-controller-manager:

```
$ ls -l /etc/kubernetes/kubelet.kubeconfig
-rw------- 1 root root 2246 Feb  7 15:38 /etc/kubernetes/kubelet.kubeconfig

$ ls -l /etc/kubernetes/cert/kubelet-client-*
-rw------- 1 root root 1281 Feb  7 15:38 /etc/kubernetes/cert/kubelet-client-2020-02-07-15-38-21.pem
lrwxrwxrwx 1 root root   59 Feb  7 15:38 /etc/kubernetes/cert/kubelet-client-current.pem -> /etc/kubernetes/cert/kubelet-client-2020-02-07-15-38-21.pem
```

No kubelet server certificate has been generated automatically yet.

Manually approve the server certificate CSRs

For security reasons, the CSR approving controllers do not automatically approve kubelet server certificate signing requests; they must be approved manually:

```
$ kubectl get csr
NAME        AGE     REQUESTOR                     CONDITION
csr-5rwzm   3m22s   system:node:zhangjun-k8s-01   Pending
csr-65nms   3m34s   system:bootstrap:2sb8wy       Approved,Issued
csr-8t5hj   3m21s   system:node:zhangjun-k8s-02   Pending
csr-jkhhs   3m20s   system:node:zhangjun-k8s-03   Pending
csr-jv7dn   3m35s   system:bootstrap:ta7onm       Approved,Issued
csr-vb6p5   3m33s   system:bootstrap:xk27zp       Approved,Issued

$ kubectl get csr | grep Pending | awk '{print $1}' | xargs kubectl certificate approve

$ ls -l /etc/kubernetes/cert/kubelet-*
```
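The one-liner above approves every Pending CSR, whoever requested it. A slightly more selective sketch, assuming the v1.16 kubectl get csr column layout shown above (NAME AGE REQUESTOR CONDITION); pending_node_csrs is a hypothetical filter that only prints Pending CSRs whose requestor is a node identity (system:node:...), so the list can be reviewed before approval.

```bash
#!/usr/bin/env bash
# pending_node_csrs: read "kubectl get csr" output on stdin and print the
# names of Pending CSRs requested by system:node:<name> users.
# Assumes columns NAME AGE REQUESTOR CONDITION (kubectl v1.16 layout).
pending_node_csrs() {
  awk 'NR > 1 && $3 ~ /^system:node:/ && $4 == "Pending" { print $1 }'
}

# Example against a live cluster:
#   kubectl get csr | pending_node_csrs | xargs -r kubectl certificate approve
```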

Kubelet API authentication and authorization

The kubelet is configured with the following authentication parameters:

authentication.anonymous.enabled: set to false, so anonymous access to port 10250 is not allowed;

authentication.x509.clientCAFile: specifies the CA certificate that signs client certificates, enabling HTTPS certificate authentication;

authentication.webhook.enabled=true: enables HTTPS bearer token authentication;

It is also configured with the following authorization parameter:

authorization.mode=Webhook: enables RBAC authorization;

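When the kubelet receives a request, it verifies the client certificate signature against clientCAFile, or checks whether the bearer token is valid. If neither check passes, the request is rejected with Unauthorized: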

```
$ curl -s --cacert /etc/kubernetes/cert/ca.pem https://192.168.220.102:10250/metrics
Unauthorized

$ curl -s --cacert /etc/kubernetes/cert/ca.pem -H "Authorization: Bearer 123456" https://192.168.220.102:10250/metrics
Unauthorized
```

Certificate authentication and authorization

A certificate whose user lacks permission is authenticated but rejected with Forbidden, while the admin certificate can read the metrics:

```
$ curl -s --cacert /etc/kubernetes/cert/ca.pem --cert /etc/kubernetes/cert/kube-controller-manager.pem --key /etc/kubernetes/cert/kube-controller-manager-key.pem https://192.168.220.102:10250/metrics
Forbidden (user=system:kube-controller-manager, verb=get, resource=nodes, subresource=metrics)

$ curl -s --cacert /etc/kubernetes/cert/ca.pem --cert /opt/k8s/work/admin.pem --key /opt/k8s/work/admin-key.pem https://192.168.220.102:10250/metrics|head
apiserver_audit_event_total 0
apiserver_audit_requests_rejected_total 0
apiserver_client_certificate_expiration_seconds_bucket{le="0"} 0
apiserver_client_certificate_expiration_seconds_bucket{le="1800"} 0
```

The values of --cacert, --cert and --key must be file paths: a file in the current directory must be given as ./admin.pem, not admin.pem, otherwise 401 Unauthorized is returned;
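When scripting these curl calls, a small guard can enforce the rule above. A sketch with a hypothetical helper as_file_path, which leaves any argument containing a / untouched and prefixes a bare file name with ./.

```bash
#!/usr/bin/env bash
# as_file_path NAME (hypothetical helper): make sure a certificate or key
# argument for curl contains a directory component.
as_file_path() {
  case "$1" in
    */*) printf '%s\n' "$1" ;;    # already a path such as /opt/... or ./...
    *)   printf './%s\n' "$1" ;;  # bare file name: prefix ./
  esac
}

# Example:
#   curl -s --cacert /etc/kubernetes/cert/ca.pem \
#     --cert "$(as_file_path admin.pem)" --key "$(as_file_path admin-key.pem)" \
#     https://192.168.220.102:10250/metrics | head
```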

Bearer token authentication and authorization

Create a ServiceAccount and bind it to the ClusterRole system:kubelet-api-admin so that it has permission to call the kubelet API:

```bash
kubectl create sa kubelet-api-test
kubectl create clusterrolebinding kubelet-api-test --clusterrole=system:kubelet-api-admin --serviceaccount=default:kubelet-api-test
SECRET=$(kubectl get secrets | grep kubelet-api-test | awk '{print $1}')
TOKEN=$(kubectl describe secret ${SECRET} | grep -E '^token' | awk '{print $2}')
echo ${TOKEN}
```
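Parsing kubectl describe output depends on its human-readable layout. An alternative sketch: the token is stored base64-encoded under .data.token in the Secret, so it can be read with kubectl get secret "${SECRET}" -o jsonpath='{.data.token}' and then decoded. The decoding step is wrapped in a hypothetical helper so it can be exercised without a cluster.

```bash
#!/usr/bin/env bash
# decode_secret_value (hypothetical helper): Secret .data values are
# base64-encoded; decode the value read from stdin.
decode_secret_value() {
  base64 -d
}

# Against a live cluster:
#   TOKEN=$(kubectl get secret "${SECRET}" -o jsonpath='{.data.token}' | decode_secret_value)
```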

```
$ curl -s --cacert /etc/kubernetes/cert/ca.pem -H "Authorization: Bearer ${TOKEN}" https://192.168.220.102:10250/metrics | head
apiserver_audit_event_total 0
apiserver_audit_requests_rejected_total 0
apiserver_client_certificate_expiration_seconds_bucket{le="0"} 0
apiserver_client_certificate_expiration_seconds_bucket{le="1800"} 0
```

cAdvisor and metrics

cAdvisor is embedded in the kubelet binary; it collects the resource usage (CPU, memory, disk, network interfaces) of the containers on its node.

Visiting https://192.168.220.102:10250/metrics and https://192.168.220.102:10250/metrics/cadvisor in a browser returns the kubelet and cAdvisor metrics, respectively.

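Note:

kubelet-config.yaml sets authentication.anonymous.enabled to false, so anonymous access to the https service on port 10250 is not allowed; the browser must therefore present a client certificate that the kubelet accepts.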
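The /metrics and /metrics/cadvisor endpoints return the Prometheus text exposition format: one sample per line, an optional {label="..."} set after the metric name, and # HELP / # TYPE comment lines. A sketch of a hypothetical filter metric_samples that selects one metric family from that output, for use with the authenticated curl commands shown earlier.

```bash
#!/usr/bin/env bash
# metric_samples NAME (hypothetical filter): read Prometheus text-format
# output on stdin and print the sample lines of metric NAME, with or
# without labels, skipping "# HELP" / "# TYPE" comment lines.
metric_samples() {
  awk -v name="$1" '
    /^#/ { next }                  # comment lines
    { split($1, parts, "{") }      # metric name is the part before "{"
    parts[1] == name { print }
  '
}

# Example:
#   curl -s --cacert /etc/kubernetes/cert/ca.pem -H "Authorization: Bearer ${TOKEN}" \
#     https://192.168.220.102:10250/metrics | metric_samples apiserver_audit_event_total
```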

References

kubelet authentication and authorization: https://kubernetes.io/docs/reference/command-line-tools-reference/kubelet-authentication-authorization/

Deploy the kube-proxy component

kube-proxy runs on all worker nodes. It watches the apiserver for changes to Services and Endpoints and creates routing rules that provide Service IPs and load balancing.

This document describes how to deploy kube-proxy in ipvs mode.

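Note: unless otherwise specified, all operations in this document are performed on the k8s-01 node, which then distributes files and runs commands remotely.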

Download and distribute the kube-proxy binary

See 05-1.部署master节点.md.

Create the kube-proxy certificate

Create the certificate signing request:

```bash
cd /opt/k8s/work
cat > kube-proxy-csr.json << EOF
{
  "CN": "system:kube-proxy",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "opsnull"
    }
  ]
}
EOF
```

CN: sets the User of this certificate to system:kube-proxy;

The predefined ClusterRoleBinding system:node-proxier binds the User system:kube-proxy to the ClusterRole system:node-proxier, which grants permission to call the proxy-related kube-apiserver APIs;

kube-proxy uses this certificate only as a client certificate, so the hosts field is empty;

Generate the certificate and private key:

```bash
cd /opt/k8s/work
cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
ls kube-proxy*
```
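cfssljson -bare kube-proxy writes kube-proxy.pem (certificate), kube-proxy-key.pem (private key) and kube-proxy.csr (signing request). A sketch of a hypothetical check that all three files exist and are non-empty before they are embedded into the kubeconfig.

```bash
#!/usr/bin/env bash
# check_cfssl_outputs BASE (hypothetical helper): verify that BASE.pem,
# BASE-key.pem and BASE.csr exist and are non-empty; report each missing
# file on stderr and fail if any is missing.
check_cfssl_outputs() {
  local base="$1" missing=0 f
  for f in "${base}.pem" "${base}-key.pem" "${base}.csr"; do
    if [ ! -s "$f" ]; then
      echo "missing or empty: ${f}" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example: check_cfssl_outputs /opt/k8s/work/kube-proxy
```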

Create and distribute the kubeconfig file

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
  --client-certificate=kube-proxy.pem \
  --client-key=kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
```

Distribute the kubeconfig file:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_name in ${NODE_NAMES[@]}
  do
    echo ">>> ${node_name}"
    scp kube-proxy.kubeconfig root@${node_name}:/etc/kubernetes/
  done
```

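Create the kube-proxy configuration file

Since v1.10, some kube-proxy parameters can be set in a configuration file. You can generate this file with the --write-config-to option, or refer to the comments in the source code.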

Create the kube-proxy configuration file template:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > kube-proxy-config.yaml.template <<EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
clientConnection:
  burst: 200
  kubeconfig: "/etc/kubernetes/kube-proxy.kubeconfig"
  qps: 100
bindAddress: ##NODE_IP##
healthzBindAddress: ##NODE_IP##:10256
metricsBindAddress: ##NODE_IP##:10249
enableProfiling: true
clusterCIDR: ${CLUSTER_CIDR}
hostnameOverride: ##NODE_NAME##
mode: "ipvs"
portRange: ""
iptables:
  masqueradeAll: false
ipvs:
  scheduler: rr
  excludeCIDRs: []
EOF
```

bindAddress: the listen address;

clientConnection.kubeconfig: the kubeconfig file used to connect to the apiserver;

clusterCIDR: kube-proxy uses --cluster-cidr to distinguish traffic from inside the cluster from external traffic; kube-proxy only SNATs requests to Service IPs when --cluster-cidr or --masquerade-all is specified;

hostnameOverride: must match the kubelet's value; otherwise kube-proxy cannot find the Node after starting and creates no ipvs rules;

mode: use ipvs mode;
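To illustrate the in-cluster versus external distinction that clusterCIDR enables, a minimal IPv4 CIDR membership test in pure bash: it answers whether an address belongs to the Pod network. This is a hypothetical sketch, not kube-proxy's implementation, and 172.30.0.0/16 in the example is an assumed value; the real one comes from CLUSTER_CIDR in environment.sh.

```bash
#!/usr/bin/env bash
# ip_to_int ADDR: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# ip_in_cidr ADDR CIDR: succeed if ADDR falls inside CIDR (e.g. 172.30.0.0/16).
ip_in_cidr() {
  local net="${2%/*}" bits="${2#*/}" mask
  # Build the network mask; /0 matches everything.
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Example (assumed CLUSTER_CIDR):
#   ip_in_cidr 172.30.5.9 172.30.0.0/16 && echo "Pod address"
```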

Create and distribute the kube-proxy configuration file for each node: